I. Introduction: Redefining AI’s Frontier

The landscape of artificial intelligence is undergoing a profound transformation, shifting from a predominantly cloud-centric model to one where sophisticated AI capabilities reside directly on end-user devices. This paradigm, known as on-device AI, local AI, or edge AI, represents a significant evolution in how intelligent systems interact with the world. Unlike traditional cloud-based AI, which necessitates sending data to remote servers for processing and retrieval, on-device AI executes features and models directly on devices such as smartphones, tablets, laptops, wearables, or even home appliances. This fundamental difference eliminates the constant reliance on cloud infrastructure, allowing AI to perform inference and even continuous training directly on the device, precisely where the data is generated.

This architectural shift from centralized, server-dependent computing to a decentralized, device-centric model marks a pivotal moment in technology. It requires a re-evaluation of network architectures, data governance frameworks, and user interaction models, extending far beyond the mere implementation of new features. The implications span from how data is managed to how applications are designed, fundamentally altering the relationship between users, their devices, and artificial intelligence.

The move towards on-device AI is compelled by several critical advantages over its cloud-based counterparts. A primary driver is the significant reduction in latency. By processing data directly on the device, the time-consuming round trip to distant servers is eliminated, enabling real-time decision-making that is crucial for applications such as voice assistants, autonomous navigation systems, or health monitoring tools. This immediacy translates into a smoother, more responsive user experience.

Another paramount advantage is enhanced privacy and security. Sensitive data remains localized on the device, minimizing the risks associated with data transmission to external servers and aligning with critical data minimization principles. This approach means that personal information is less exposed to potential breaches or unauthorized access during transit or storage in centralized data centers.

Furthermore, energy efficiency serves as a substantial impetus for this shift. Local processing reduces the demand for energy-intensive data centers and extensive network transfers, contributing to environmental sustainability and lowering operational costs. The energy consumption of AI workloads in data centers is projected to surge dramatically, prompting a search for more sustainable alternatives. AI chips designed for on-device processing prioritize energy efficiency, with reported reductions of 100- to 1,000-fold in energy consumption per AI task compared to cloud-based AI. The benefit goes beyond longer battery life: it addresses a broader energy challenge, and companies adopting edge-first models can gain a competitive advantage by aligning business and environmental goals.

Finally, on-device AI enables robust offline functionality, allowing applications to operate seamlessly even in environments with poor or no internet connectivity. This report will delve into the practical implementation and implications of AI directly on personal computing devices, specifically laptops, tablets, and smartphones, which are increasingly equipped with specialized hardware and software to facilitate local AI processing.

II. What is the Market Offering? Current Landscape and Applications

The integration of artificial intelligence directly onto personal devices is no longer a futuristic concept; it is a pervasive reality that is fundamentally reshaping user experiences across a wide array of consumer electronics. The market offering for on-device AI is broad and continues to expand, driven by advancements in hardware and software.

The Ubiquitous Presence: AI in Smartphones and Tablets

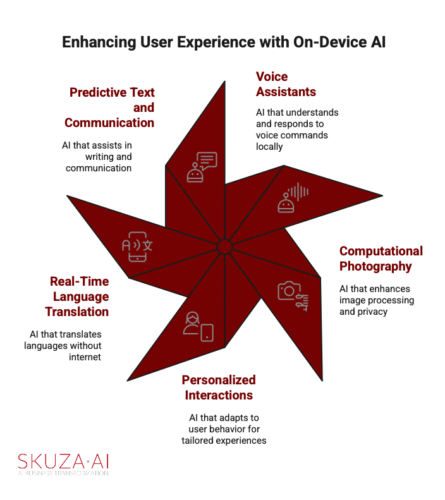

Smartphones and tablets have become primary vehicles for the widespread adoption of on-device AI, with features that enhance daily interactions and tasks. Voice assistants like Apple’s Siri and Google Assistant are prime examples, leveraging local processing to understand commands and provide real-time responses, making interactions more fluid and immediate. This local processing capability allows for faster response times compared to cloud-dependent alternatives, crucial for seamless user experience.

Computational photography stands out as a major area of on-device AI innovation. AI models running locally power features such as face recognition (e.g., Face ID), object detection, portrait mode enhancements, night mode, and intelligent scene recognition. Users can modify images, remove unwanted objects, and intelligently reconstruct photos (e.g., Google’s Magic Editor) directly on their device, significantly minimizing the need to send sensitive image data to the cloud for processing. The ability to perform these complex image manipulations locally ensures privacy and speed.

Furthermore, personalized interactions are profoundly enhanced as on-device AI analyzes user behaviors, unique speech patterns, facial expressions, and preferences to generate more relevant responses and recommendations. This includes personalized app suggestions, adaptive battery management that optimizes power consumption based on usage patterns, and context-aware notifications. This trend signifies a shift from reactive AI, which responds to explicit commands, to proactive and deeply personalized AI experiences, where the device anticipates user needs and adapts to individual styles, making the technology feel more intuitive and anticipatory.

Beyond these, on-device AI enables real-time language translation apps to function even without internet connectivity, proving invaluable for travelers. Predictive text and communication features also benefit from local processing, offering smart keyboard suggestions and assistance with email and message composition. Samsung’s Galaxy AI features, for instance, include “Circle to Search” for instant information retrieval by circling content on screen, and “Writing Assist” for grammar and tone suggestions.

Empowering Productivity: AI in Laptops and PCs

The evolution of laptops into “AI PCs” represents a significant leap in on-device AI capabilities for personal computing. These devices, powered by processors like Intel Core Ultra, integrate a CPU, GPU, and a dedicated Neural Processing Unit (NPU) to deliver enhanced AI performance directly on the device. This architectural design enables a wide range of AI features that boost productivity and creativity.

Users can now leverage AI for creative tasks such as drafting emails, organizing calendars, generating images or music from text prompts, and enhancing photos and videos with minimal effort. AI assistants accelerate writing, coding, and content creation, allowing for faster iteration and improved output quality. For productivity, AI PCs offer features like intelligent battery management, AI-powered background blur, noise suppression, and filters/avatars for video calls, ensuring smooth collaboration with reduced power consumption. In the realm of gaming, AI-powered upscaling enhances visuals and performance, while the integrated NPU offloads AI tasks such as background removal and audio optimization, leading to smoother streaming and gaming experiences.

For businesses, AI PCs offer compelling advantages, including a reduced reliance on costly cloud instances as more workloads can be processed directly on the device. This also enhances data privacy by keeping sensitive information local and enables proactive combat against cyber threats with AI-assisted security technologies. Furthermore, AI PCs streamline IT operations through integrated remote manageability tools and CPU telemetry data. This democratization of advanced AI capabilities, particularly generative AI, signifies that tasks once exclusive to cloud services are now becoming standard on consumer devices, lowering the barrier for individuals and small businesses to utilize powerful AI tools without continuous cloud expenses.

Tablets, such as the Lenovo Yoga Tab Plus, also offer similar productivity enhancements, including AI-powered writing tools for continuous writing and rephrasing, and AI transcription services for meetings, demonstrating the convergence of capabilities across device types.

Beyond the Mainstream: Emerging Use Cases

The reach of on-device AI extends beyond traditional personal computing devices, permeating various aspects of our physical environment. Wearable devices like fitness trackers and smartwatches extensively leverage on-device AI for real-time health monitoring, analyzing vital data such as heart rate, activity levels, blood glucose, and sleep patterns. This immediate, localized analysis provides users and healthcare professionals with instant feedback and actionable insights, enhancing personal health management.

In the smart home domain, devices like security cameras and thermostats utilize on-device AI for tasks such as motion detection, facial recognition, and learning user preferences for optimal energy efficiency. AI-enabled security cameras, for instance, can detect unusual activity and alert homeowners instantly, processing video feeds locally without constant cloud reliance.

The automotive sector is another critical area where on-device AI is indispensable. Tasks such as object detection, lane tracking, navigation, voice assistants, drowsiness detection systems, and predictive maintenance rely heavily on AI models that process data in real-time directly within the vehicle. In scenarios where connectivity may be compromised, such as military applications involving drones or autonomous robots, the autonomy provided by on-device AI for decision-making is critical. These examples illustrate a broader trend where AI is evolving from a mere software application on a screen to an embedded intelligence within our physical surroundings. This emergence of “ambient intelligence” allows devices to understand and react to their immediate environment in real-time, enabling more seamless, context-aware, and integrated interactions with technology.

The following table summarizes key on-device AI applications across various device categories:

Table 2: Key On-Device AI Applications Across Device Categories

| Device Category | Key On-Device AI Applications |
| --- | --- |
| Smartphones | Voice Assistants (Siri, Google Assistant), Computational Photography (Face ID, Portrait Mode, Night Mode, Object/Scene Recognition, Photo Editing, Magic Editor), Real-time Translation, Predictive Text/Keyboard, Call Screening, Personalized Recommendations, Adaptive Battery Management |
| Tablets | Voice Recognition, Natural Language Processing (NLP), Performance Optimization, Personalized User Experience, Augmented Reality (AR), AI writing tools (summarization, rephrase), AI transcription, Circle to Search |
| Laptops (AI PCs) | AI-Accelerated Creativity (video/photo editing, image/music generation), Productivity Assistance (email drafting, calendar organization, coding), Gaming Upscaling/Optimization, AI-enhanced video calls (background blur, noise suppression), Proactive Security, IT Operations Streamlining |
| Wearables | Health Monitoring (heart rate, activity, sleep, blood glucose), Fitness Tracking, Personalized Health Insights |
| Smart Home Devices | Security Cameras (motion detection, facial recognition), Smart Thermostats (learning preferences, energy efficiency), Voice Control |
| Automotive | Object Detection, Lane Tracking, Navigation, Voice Assistants, Drowsiness Detection, Predictive Maintenance |

III. How Can They Get It? Hardware and Software Ecosystems

The widespread availability and increasing sophistication of on-device AI are fundamentally enabled by a symbiotic relationship between advanced hardware and robust, optimized software. This dual evolution is making powerful AI capabilities accessible directly on personal devices.

The Hardware Revolution: Specialized Processors for AI

The most significant catalyst for on-device AI is the emergence and proliferation of Neural Processing Units (NPUs), also referred to as AI accelerators or deep learning processors. Unlike general-purpose CPUs (Central Processing Units) and GPUs (Graphics Processing Units), NPUs are purpose-built to accelerate the mathematical computations critical for AI and machine learning algorithms, particularly the intensive operations involved in neural networks, such as convolutions and matrix multiplications. Their design prioritizes parallel processing, allowing them to perform millions of calculations simultaneously, and they are significantly more energy-efficient for AI tasks, which is a crucial factor for portable devices with limited battery life.

This “AI-first” hardware design philosophy, where the NPU is a core component rather than an add-on, signals that future devices are being fundamentally architected around AI workloads. This implies a future where AI processing is an intrinsic, always-on capability, enabling new types of applications that are currently infeasible.

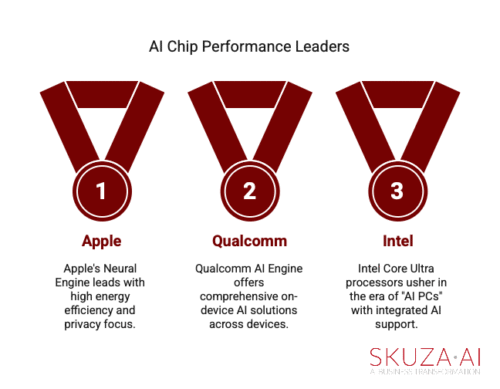

Leading technology companies are at the forefront of this hardware revolution, each developing specialized solutions:

- Apple’s Neural Engine (ANE) is a prominent NPU integrated into all Apple A-series System-on-Chips (SoCs) since the A11 Bionic (2017) and M-series SoCs for Macs (since M1 in 2020). The ANE powers core features like Face ID, Siri, and advanced computational photography capabilities such as Smart HDR, Night Mode, and Deep Fusion. Its high energy efficiency ensures that real-time AI tasks consume minimal battery power, reinforcing privacy by keeping user data secure on the device. The M4 chip, for instance, demonstrates remarkable progress, capable of 38 trillion operations per second (TOPS), a substantial improvement over the M3’s 18 TOPS. Apple is also reportedly developing new AI chips specifically for AirPods and future Apple Watches, with mass production targeted for around 2027.

- Qualcomm AI Engine provides a comprehensive solution for on-device AI, employing heterogeneous computing across its Hexagon NPU, Adreno GPU, Qualcomm CPUs (such as Oryon), and Sensing Hub. This integrated engine accelerates generative AI capabilities on a wide range of devices, including laptops, smartphones, extended reality (XR) devices, IoT devices, and robotics. Snapdragon X Elite processors, for example, offer impressive NPU speeds of up to 45 TOPS. Qualcomm further supports developers with its AI Hub, a platform built on its AI Stack.

- Intel Core Ultra processors are designed to usher in the era of “AI PCs.” These processors integrate CPU, GPU, and NPU to deliver robust AI application support directly on laptops. They enable a variety of AI-enhanced creative tasks, productivity features, and gaming optimizations, reducing the need for cloud reliance for many common AI workloads.

Beyond these major players, other significant technologies and companies contribute to the specialized AI hardware landscape. NVIDIA offers its Jetson AGX Orin for high-end robotics and computer vision, Google’s Coral Dev Board features an Edge TPU for low-power AI acceleration, while AMD’s Xilinx Kria K26 SOM provides adaptive edge AI for vision systems. Hailo-8 is recognized for its high inferencing throughput and extreme power efficiency in power-constrained edge devices. Furthermore, Field-Programmable Gate Arrays (FPGAs) are gaining traction due to their parallel processing capabilities, low power consumption, and adaptability, making them valuable for prototyping and deploying on-device AI, as they can be reprogrammed to optimize performance for specific AI tasks.

Software Foundations: Frameworks and Development Tools

The advancements in AI hardware are complemented by a software ecosystem that streamlines the development and deployment of on-device AI. This ecosystem comprises optimized libraries for on-device inference, platform-specific AI frameworks, and a growing array of open-source applications.

Optimized libraries for on-device inference are crucial for running AI models efficiently on resource-constrained devices.

- TensorFlow Lite (TFLite), developed by Google, is a lightweight version of the popular TensorFlow framework. It is specifically engineered for on-device inference on mobile, embedded, and IoT devices, supporting platforms like Android, iOS, embedded Linux, and microcontrollers. TFLite optimizes trained models using techniques such as quantization, which significantly reduces memory usage and computational cost, making it feasible to deploy machine learning everywhere. With TFLite running on over 4 billion devices, its impact on widespread on-device AI is substantial. The accompanying TensorFlow Lite Model Maker library simplifies the process of training TFLite models with custom datasets, leveraging transfer learning to reduce training data requirements and time.

- Core ML is Apple’s proprietary framework for integrating machine learning models into applications across all its platforms, including iOS, iPadOS, macOS, watchOS, and visionOS. Core ML is optimized for on-device performance by seamlessly leveraging Apple silicon’s CPU, GPU, and Neural Engine, while simultaneously minimizing memory footprint and power consumption. Core ML Tools facilitate the conversion of models from popular machine learning frameworks like TensorFlow or PyTorch into the Core ML format, and the framework now supports advanced generative AI models with sophisticated compression techniques.

- PyTorch Mobile is another framework that enables developers to run PyTorch models directly on mobile devices, thereby facilitating the creation of AI-driven applications.

- MediaPipe Solutions, an open-source framework developed by Google, offers a cross-platform solution for building a wide range of AI applications. This includes capabilities for object detection, image segmentation, and even lightweight Large Language Model (LLM) inference directly on-device.

- LiteRT is the name Google adopted in 2024 for the TensorFlow Lite runtime. It focuses on efficient AI model execution on edge devices, aiming for smooth and responsive model performance on smartphones.
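The quantization step mentioned for TFLite above can be illustrated with a minimal sketch. It uses plain Python rather than any framework API, and the function names `quantize_int8` and `dequantize_int8` are hypothetical helpers chosen for this example. It shows one common scheme (affine quantization with a scale and zero point) and is not a description of how TFLite or Core ML implement it internally.

```python
from typing import List, Tuple


def quantize_int8(values: List[float]) -> Tuple[List[int], float, int]:
    """Map floats to int8 with an affine (scale + zero-point) scheme."""
    # Extend the range to include 0.0 so that zero stays representable.
    lo = min(min(values), 0.0)
    hi = max(max(values), 0.0)
    # 255 steps span the int8 range; fall back to 1.0 for all-zero input.
    scale = (hi - lo) / 255.0 or 1.0
    zero_point = round(-128 - lo / scale)
    # Round each value to the nearest step and clamp into [-128, 127].
    q = [max(-128, min(127, round(v / scale) + zero_point)) for v in values]
    return q, scale, zero_point


def dequantize_int8(q: List[int], scale: float, zero_point: int) -> List[float]:
    """Recover approximate float values from the int8 representation."""
    return [(x - zero_point) * scale for x in q]


# Illustrative weights (made up for this example).
weights = [-1.0, -0.25, 0.0, 0.4, 1.0]
q, scale, zero_point = quantize_int8(weights)
restored = dequantize_int8(q, scale, zero_point)
```

Each stored value shrinks from four bytes (32-bit float) to one byte, at the cost of a reconstruction error on the order of one quantization step (`scale`).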

Beyond these foundational libraries, platform-specific AI frameworks are emerging to provide deeper integration and more advanced capabilities:

- Apple’s Foundation Models framework grants developers direct access to Apple’s on-device foundation language model, forming a core component of the “Apple Intelligence” privacy-first initiative. These models are meticulously optimized for low latency and energy efficiency on Apple silicon and are embedded directly into the operating system. This design allows for the creation of powerful, private, and performant features across macOS, iPadOS, iOS, and visionOS without increasing the size of individual applications. Furthermore, Xcode 26, Apple’s flagship integrated development environment, now includes built-in support for ChatGPT and other LLMs, assisting developers with code generation, bug fixing, and test writing.

- Google’s GenAI APIs, integrated as part of ML Kit, provide access to on-device generative capabilities through models like Gemini Nano. This enables features such as offline summarization of voice recordings and enhanced image accessibility for users with low vision. Google AI Studio and Gemini in Android Studio further support developers in prototyping and building AI-powered Android applications.

The growing ecosystem also includes a variety of open-source local AI applications that empower users to run AI models directly on their personal computers. Examples such as Jan AI and Pieces AI offer offline chat functionalities, code assistance, and the ability to run various LLMs locally. These tools are often favored for their privacy-centric approach, ensuring that sensitive data never leaves the user’s device.

IV. What is the Security Improvement? A New Paradigm for Data Protection

The shift to on-device AI represents a profound transformation in data protection, establishing a new paradigm for privacy and security. By fundamentally altering where and how data is processed, on-device AI offers significant improvements over traditional cloud-based models.

Enhanced Data Privacy: The Core Advantage of Local Processing

The most compelling argument for on-device AI lies in its intrinsic ability to enhance data privacy. When sensitive data is processed directly on the user’s device, the necessity of transmitting this information to external cloud servers is either minimized or entirely eliminated. This design choice dramatically reduces the risk of data interception, breaches, or unauthorized access by third parties during transmission or while stored in centralized data centers.

This approach aligns seamlessly with core data protection principles such as confidentiality, data minimization, and storage limitation. In an ideal on-device AI implementation, only one copy of personal data resides on the device itself, significantly altering data protection risks. Users gain direct control over their personal information and processing decisions, which in turn fosters greater trust in AI applications. Practical examples include on-device facial recognition systems like Face ID, voice assistants that process commands locally, and medical diagnosis applications that analyze sensitive health data without ever sending it off the patient’s device. This consistent emphasis on privacy across numerous sources indicates that for on-device AI, privacy is not merely a feature to be added, but a fundamental design principle. Unlike cloud AI, where privacy often relies on policy and encryption during transit or at rest, on-device AI builds privacy into the very architecture by keeping data local. This constitutes a proactive, “privacy-by-design” approach that mitigates risks from the outset, rather than reacting to potential breaches. This shift is particularly critical for applications handling sensitive data and for building user trust, especially given the escalating global concerns about data privacy.

Robust Security Measures at the Edge

Beyond the inherent privacy benefits of data localization, on-device AI significantly contributes to overall system security. It enables real-time threat detection and response by analyzing data locally and immediately, allowing for much quicker mitigation of risks compared to systems that rely on cloud processing. This capability is particularly valuable in high-stakes environments such as critical infrastructure and smart cities.

The reduced attack surface is another substantial advantage; by minimizing the amount of sensitive data transmitted over the internet, on-device AI inherently lessens exposure to cybersecurity threats and reduces compliance risks. The decentralized nature of edge AI deployments further enhances security resilience by reducing single points of failure, making the overall system more robust against attacks.

A significant advancement in on-device AI security is the implementation of hardware-level security, most notably through Trusted Execution Environments (TEEs). A TEE is a secure, isolated area within a computer processor designed to run sensitive code and handle private data separately from the main operating system. This hardware-based isolation protects critical operations from malware or tampering. Within a TEE, sensitive data (e.g., patient records, financial transactions, biometric authentication credentials) is decrypted and processed only within this protected space, ensuring that even if the main operating system is compromised, the confidential information remains secure. For instance, a diagnostic algorithm or a large language model (LLM) can run inside the TEE, where patient data is only decrypted in that protected space, maintaining confidentiality and compliance with healthcare privacy regulations. Similarly, FPGAs can serve as a hardware root of trust (HRoT), securing the boot process and verifying the integrity of AI models. The increasing recognition of the high value of AI workloads has led to a growing focus on securing AI at the silicon level, indicating a crucial shift towards hardware-rooted security that protects AI operations.

Advanced Privacy-Preserving Techniques

Beyond direct data localization and hardware isolation, on-device AI leverages sophisticated privacy-preserving techniques to further strengthen data protection:

- Federated Learning (FL): This approach allows multiple devices to collaboratively train a shared AI model without ever sending raw data to a central server. Instead, each device trains on its own data locally and shares only model updates (e.g., gradients or weight changes) with a central server, which aggregates these updates to improve the global model. Raw personal information therefore stays on the device, making the method well suited to sensitive applications such as collaborative medical diagnostics across different hospitals without sharing patient records. Google’s Gboard, for example, utilizes federated learning to enhance typing suggestions without collecting users’ private messages. Federated learning represents an evolution in privacy thinking, enabling collaborative intelligence without pooling individual data, which is critical for scaling AI in privacy-sensitive domains.

- Differential Privacy (DP): This technique adds carefully calibrated “noise” to data, model updates, or model outputs. The resulting mathematical guarantee is that the output remains essentially the same whether or not a specific individual’s data was included in the input, making it statistically improbable to trace model changes back to specific users. The noise is calibrated so that overall model accuracy is largely preserved, although stronger privacy guarantees generally cost some accuracy.

- Homomorphic Encryption (HE): While currently more computationally intensive and less widely implemented on consumer devices, homomorphic encryption allows computations to be performed directly on encrypted data without the need for decryption. This means that AI models could theoretically be trained on encrypted data, with the model never “seeing” the actual plaintext.

- Secure Multi-Party Computation (SMPC): SMPC protocols enable multiple parties to jointly compute a result without revealing their individual inputs. These advanced cryptographic techniques are particularly valuable for securing the aggregation phase in federated learning, ensuring that individual contributions cannot be reverse-engineered.
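The federated learning loop described in the list above can be sketched in a few lines. This is a toy illustration under stated assumptions: the model is a single weight `w` for `y = w * x`, the client datasets are invented, and the helper names `local_update` and `federated_round` are hypothetical. It follows the general weighted-averaging idea (often called federated averaging) and is not the protocol used by Gboard or any specific product.

```python
from typing import List, Tuple

# (x, y) pairs that stay on one device and are never sent to the server.
Dataset = List[Tuple[float, float]]


def local_update(w: float, data: Dataset, lr: float = 0.1, epochs: int = 5) -> float:
    """Run a few gradient-descent steps on-device for the model y = w * x."""
    for _ in range(epochs):
        # Gradient of the mean squared error with respect to w.
        grad = sum(2 * (w * x - y) * x for x, y in data) / len(data)
        w -= lr * grad
    return w


def federated_round(w_global: float, clients: List[Dataset]) -> float:
    """One round: clients train locally, the server averages by sample count."""
    # Only the updated weight and the sample count leave each device.
    updates = [(local_update(w_global, data), len(data)) for data in clients]
    total = sum(n for _, n in updates)
    return sum(w * n for w, n in updates) / total


# Two illustrative clients whose private data both follow y = 3x.
clients = [
    [(1.0, 3.0), (2.0, 6.0)],
    [(0.5, 1.5), (1.5, 4.5), (1.0, 3.0)],
]
w = 0.0
for _ in range(20):
    w = federated_round(w, clients)
```

The server only ever sees weights and sample counts. In practice those updates can still leak information, which is why the list pairs federated learning with differential privacy and secure aggregation.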

These advanced techniques, combined with local processing and hardware-level security, create a layered defense for on-device AI.
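As one concrete layer of that defense, the secure aggregation idea mentioned for SMPC can be sketched with pairwise masks that cancel in the sum. This is a simplified illustration: the seeded `random.Random` stands in for real pairwise key agreement, updates are small integers, and there is no handling of clients that drop out. The names `pairwise_masks` and `mask_update` are hypothetical.

```python
import random
from typing import Dict, List

MODULUS = 2**32  # all arithmetic is done modulo this value


def pairwise_masks(client_ids: List[int], seed: int = 0) -> Dict[int, int]:
    """Give each client a mask such that all masks sum to 0 modulo MODULUS."""
    rng = random.Random(seed)  # assumption: stands in for pairwise key agreement
    masks = {cid: 0 for cid in client_ids}
    for i, a in enumerate(client_ids):
        for b in client_ids[i + 1:]:
            shared = rng.randrange(MODULUS)  # secret known only to a and b
            masks[a] = (masks[a] + shared) % MODULUS  # a adds the secret
            masks[b] = (masks[b] - shared) % MODULUS  # b subtracts it
    return masks


def mask_update(update: int, mask: int) -> int:
    """Hide a single client's update behind its mask."""
    return (update + mask) % MODULUS


# Illustrative integer updates from three clients.
updates = {1: 12, 2: 7, 3: 30}
masks = pairwise_masks(list(updates))
masked = {cid: mask_update(u, masks[cid]) for cid, u in updates.items()}

# The server sums masked values; the masks cancel and only the total remains.
aggregate = sum(masked.values()) % MODULUS
```

Each masked value on its own looks random to the server, yet the sum equals the sum of the true updates because every shared secret is added once and subtracted once.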

V. The State of Development: Challenges and Future Outlook

While on-device AI offers compelling advantages and has seen rapid adoption, its development and widespread deployment are not without significant challenges. Understanding these hurdles is crucial for appreciating the current state of the technology and anticipating its future trajectory.

Current Limitations and Hurdles

Despite the rapid advancements, on-device AI faces inherent constraints primarily due to the physical limitations of edge devices.

- Hardware Constraints: Devices like smartphones, tablets, and even laptops possess limited processing power, memory (RAM), and battery life when compared to the vast resources available in cloud servers. Running complex AI models, especially large language models (LLMs), can quickly drain device batteries and lead to overheating. While specialized NPUs significantly alleviate some of these issues, there remains a constant tension between the desired complexity and performance of AI and the practical capabilities of consumer device hardware. For instance, current on-device LLMs are typically compact (e.g., Apple’s 3-billion-parameter model) and optimized for specific text tasks, not for general world knowledge chatbots, reflecting these limitations.

- Model Optimization Complexity: To operate effectively within these hardware constraints, AI models must undergo aggressive optimization. This involves techniques collectively known as model compression, which include quantization (reducing numerical precision, for example, from 32-bit floating-point to more efficient 8-bit integers), pruning (identifying and removing less important neural network weights or connections), knowledge distillation (training a smaller “student” model to replicate the outputs of a larger, more accurate “teacher” model), and low-rank decomposition. While these techniques successfully reduce model size, accelerate inference, and lower memory usage, they can sometimes lead to a degradation in accuracy or generalizability. Achieving the right balance between efficiency and performance requires careful calibration and significant development effort.

- Update Management and Version Control Challenges: A key difference from cloud AI is the difficulty in updating models. Unlike cloud models that can be instantly deployed and updated centrally, on-device AI models typically require updates to be pushed to individual devices, often through app updates or operating system patches. This process can introduce compatibility issues, demand higher user effort, and complicate the task of ensuring consistent model versions across a diverse fleet of devices. This highlights a fundamental trade-off: highly autonomous, locally-run models are harder to update and adapt compared to their cloud counterparts, implying that maintaining their relevance and performance over time requires sophisticated lifecycle management strategies.

- Operational and Integration Complexities: Managing a distributed network of AI-enabled edge devices introduces significant operational hurdles. These include ensuring consistent model performance across varying device types and conditions, continuously monitoring system health, and integrating new AI solutions with existing legacy infrastructure. Environmental factors such as dust, vibration, and temperature variations can also impact device reliability, particularly for AI systems deployed in industrial or outdoor settings.
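Of the compression techniques named in the list above, pruning is the simplest to show in code. The sketch below implements unstructured magnitude pruning on a flat list of weights. The function name `magnitude_prune` and the sample weights are invented for illustration, and real toolchains typically prune per layer and fine-tune afterwards to recover accuracy.

```python
from typing import List


def magnitude_prune(weights: List[float], sparsity: float) -> List[float]:
    """Zero out the smallest-magnitude fraction `sparsity` of the weights."""
    if not 0.0 <= sparsity <= 1.0:
        raise ValueError("sparsity must be in [0, 1]")
    k = int(len(weights) * sparsity)  # number of weights to remove
    # Indices of the k weights with the smallest absolute value.
    order = sorted(range(len(weights)), key=lambda i: abs(weights[i]))
    drop = set(order[:k])
    return [0.0 if i in drop else w for i, w in enumerate(weights)]


# Illustrative weights (made up for this example).
weights = [0.8, -0.05, 0.3, 0.01, -0.6, 0.02]
pruned = magnitude_prune(weights, sparsity=0.5)
```

Zeroed weights can be stored and computed sparsely, which is where the size and speed gains come from. The accuracy trade-off noted above appears once the removed weights start to matter to the model's outputs.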

Addressing Security Vulnerabilities and Adversarial Attacks

While on-device AI inherently enhances privacy by keeping data local, it is not immune to a range of sophisticated security threats, particularly those stemming from Adversarial Machine Learning (AML). AML involves crafting inputs specifically designed to deceive, steal, or exploit AI models. The expanding threat landscape necessitates a multi-layered defense strategy.

Understanding Key Threats:

- Data Poisoning: Attackers can inject incorrect or malicious data into the training dataset, corrupting the AI’s functionality and leading to false choices or predictions.

- Model Inversion: These privacy attacks aim to reconstruct sensitive training data by repeatedly querying the model and analyzing its outputs. This poses a severe privacy threat, potentially leaking personal data, trade secrets, or even revealing whether a model’s outputs infringe on copyright.

- Evasion Attacks: Attackers make small, often imperceptible changes to input data that cause misclassification or misinterpretation by the AI, potentially allowing them to bypass AI-based security systems.

- Model Stealing (Extraction) Attacks: Adversaries can replicate a model’s functionality by repeatedly querying it, effectively stealing the intellectual property embedded in the trained model.

- Privacy Leakage: AI models may inadvertently memorize and leak sensitive information from their training data through specific queries or generated outputs.

- Prompt Injection: Particularly relevant for Large Language Models (LLMs), crafted inputs can manipulate a model’s behavior, causing it to produce harmful or unintended outputs by exploiting its reliance on user prompts.

- Hardware Vulnerabilities: Despite the benefits of local processing, physical tampering or exploitation of underlying hardware flaws can still compromise on-device AI systems.
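To make the evasion attack in the list above concrete, here is a minimal sketch of a sign-of-gradient perturbation (in the style of the fast gradient sign method) against a toy logistic classifier. The weights, input, and `epsilon` are made-up values for illustration, not taken from any real system. With only three features the perturbation is fairly large; for high-dimensional inputs such as images, a much smaller per-feature change can have the same effect.

```python
import math


def sigmoid(z: float) -> float:
    return 1.0 / (1.0 + math.exp(-z))


def predict(weights: list[float], bias: float, x: list[float]) -> float:
    """Return the model's probability that x belongs to class 1."""
    return sigmoid(sum(w * xi for w, xi in zip(weights, x)) + bias)


def sign(value: float) -> int:
    """Return -1, 0, or 1 depending on the sign of value."""
    return (value > 0) - (value < 0)


def fgsm_perturb(weights, bias, x, true_label, epsilon):
    """Move every feature by at most epsilon in the direction that raises the loss.

    For logistic regression with cross-entropy loss, the gradient of the loss
    with respect to the input is (p - y) * w, so only its sign is needed.
    """
    p = predict(weights, bias, x)
    gradient = [(p - true_label) * w for w in weights]
    return [xi + epsilon * sign(g) for xi, g in zip(x, gradient)]


# Hypothetical toy model and input, chosen only for illustration.
weights, bias = [2.0, -1.5, 0.5], -0.2
x = [0.6, 0.3, 0.4]  # classified as class 1 (probability above 0.5)

x_adv = fgsm_perturb(weights, bias, x, true_label=1, epsilon=0.25)

print("clean probability:      ", round(predict(weights, bias, x), 3))
print("adversarial probability:", round(predict(weights, bias, x_adv), 3))
```

In this toy case the bounded perturbation pushes the predicted probability from above 0.5 to below it, so the predicted class flips even though no feature moved by more than `epsilon`.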

Mitigation Strategies for On-Device AI:

To counter these threats, a comprehensive and multi-layered defense is essential, combining both software and hardware measures.

- Robust Data Validation: Implementing comprehensive checks to identify and filter malicious or corrupted data is crucial during both the training and inference phases.

- Adversarial Training: Training AI models on known adversarial examples alongside clean data can significantly improve their robustness and resilience to evasion attacks.

- Model Security Enhancements: Restricting API access, implementing rate limits to deter model extraction attempts, and reducing the granularity of model outputs can minimize information leakage.

- Differential Privacy: Applying calibrated noise to data or model updates provides a mathematical guarantee of individual privacy by obscuring specific data points, making it difficult to trace information back to any single source.

- Hardware-Based Protections: Trusted Execution Environments (TEEs) isolate sensitive computations and prevent unauthorized access, even if the rest of the device is compromised. Secure boot processes and stringent access controls are also vital to protect the integrity of the device and its AI models.

- Continuous Monitoring and Auditing: Regular checks of AI models and systems are necessary to ensure adherence to privacy standards, detect anomalies, and track potential breaches.

- Ethical AI Practices: Adhering to responsible AI principles, ensuring clear consent mechanisms for data usage, and educating users about AI capabilities and limitations are critical for building and maintaining trust.
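The "Model Security Enhancements" item above combines two ideas: limiting how often a model can be queried, and returning less detailed outputs. The following is a minimal sketch of both, assuming a hypothetical `score_fn` that returns a score per label. The class name, parameters, and toy scoring function are illustrative and not part of any particular framework.

```python
import time
from collections import deque


class RateLimitExceeded(Exception):
    """Raised when a caller exceeds the allowed number of queries."""


class GuardedClassifier:
    """Wraps a scoring function with a sliding-window rate limit and
    returns only the top label instead of the full score vector."""

    def __init__(self, score_fn, max_queries, window_seconds, clock=time.monotonic):
        self._score_fn = score_fn
        self._max_queries = max_queries
        self._window = window_seconds
        self._clock = clock
        self._timestamps = deque()

    def classify(self, features):
        now = self._clock()
        # Forget queries that have fallen out of the sliding window.
        while self._timestamps and now - self._timestamps[0] >= self._window:
            self._timestamps.popleft()
        if len(self._timestamps) >= self._max_queries:
            raise RateLimitExceeded("too many queries in the current window")
        self._timestamps.append(now)
        scores = self._score_fn(features)
        # Coarse output: the label only, with no confidence values.
        return max(scores, key=scores.get)


def toy_scores(features):
    """Hypothetical stand-in for a real model."""
    total = sum(features)
    return {"positive": total, "negative": -total}


# A fake clock keeps the example deterministic.
fake_now = [0.0]
guard = GuardedClassifier(toy_scores, max_queries=2, window_seconds=60.0,
                          clock=lambda: fake_now[0])

first = guard.classify([0.2, 0.5])
second = guard.classify([-1.0, 0.1])
try:
    guard.classify([0.3])
    blocked = False
except RateLimitExceeded:
    blocked = True

fake_now[0] = 61.0  # the window has passed, so queries are allowed again
after_window = guard.classify([0.3])
```

Returning only a label gives an attacker less signal per query than full confidence scores, and the rate limit raises the cost of collecting the many queries that extraction and inversion attacks typically need. Neither measure is sufficient on its own.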

The array of adversarial attacks demonstrates that localization alone is insufficient for complete security. The threat landscape is expanding, requiring a sophisticated defense strategy that integrates software-level mitigations with hardware-level security. This indicates that securing on-device AI is an ongoing, complex endeavor demanding continuous research, development, and vigilance from manufacturers and developers to maintain trust and reliability.
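As one example of a software-level mitigation, the calibrated noise mentioned under differential privacy is often applied to model updates in two steps: clip the update to a fixed norm, then add Gaussian noise scaled to that norm. The sketch below illustrates only those two steps. The function names and parameter values are assumptions, and the actual privacy guarantee (the epsilon value) depends on the noise level, the number of rounds, and the accounting method, none of which are computed here.

```python
import math
import random


def clip_l2(update: list[float], max_norm: float) -> list[float]:
    """Scale the update down so its L2 norm is at most max_norm."""
    norm = math.sqrt(sum(u * u for u in update))
    scale = min(1.0, max_norm / norm) if norm > 0 else 1.0
    return [u * scale for u in update]


def privatize_update(update, max_norm, noise_multiplier, rng):
    """Clip the update, then add Gaussian noise proportional to the clip norm."""
    clipped = clip_l2(update, max_norm)
    sigma = noise_multiplier * max_norm
    return [u + rng.gauss(0.0, sigma) for u in clipped]


rng = random.Random(0)            # seeded only to make the example repeatable
raw_update = [3.0, -4.0, 0.0]     # hypothetical local model update, L2 norm 5

clipped = clip_l2(raw_update, max_norm=1.0)
noisy_update = privatize_update(raw_update, max_norm=1.0,
                                noise_multiplier=1.1, rng=rng)

print("clipped:", clipped)
print("noisy:  ", noisy_update)
```

Clipping bounds how much any single device can influence the aggregate, which is what allows the added noise to be calibrated. A larger `noise_multiplier` gives stronger privacy at the cost of a less accurate update.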

Advancements and Future Trends

The trajectory of on-device AI is characterized by continuous innovation, propelled by both technological breakthroughs and escalating market demand. The future promises even more powerful, efficient, and integrated AI experiences directly on personal devices.

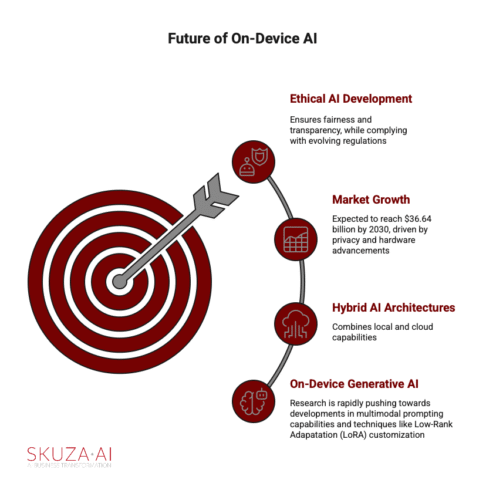

- Next-Generation AI Hardware: The coming years will witness the emergence of even more powerful and energy-efficient AI accelerators. This includes continued advancements in NPUs, whose throughput is measured in trillions of operations per second (TOPS): the Neural Engine in Apple’s M4 chip reaches 38 TOPS, and the NPU in Qualcomm’s Snapdragon X Elite processors reaches 45 TOPS. Beyond conventional NPUs, research into neuromorphic computing, which aims to mimic the human brain’s processing mechanisms, and into quantum machine learning could eventually yield much faster and more energy-efficient AI computation, although both remain largely at the research stage for personal devices. FPGAs will continue to play a crucial role due to their reconfigurability, enabling flexible prototyping and deployment of tailored AI solutions.

- The Evolution of On-Device Generative AI and LLMs: While current on-device LLMs are typically compact (e.g., Apple’s approximately 3-billion-parameter model) and optimized for specific text tasks like summarization, entity extraction, and content generation, research is rapidly pushing towards more capable on-device generative AI. This includes the development of multimodal prompting capabilities, allowing models to understand and respond to a combination of text and image inputs. Techniques like LoRA (Low-Rank Adaptation) customization are enabling cost-effective fine-tuning of LLMs directly on-device, allowing for personalized model behavior without retraining the entire model. Companies like Arm and Stability AI are actively optimizing models for on-device text-to-audio generation, achieving significant speed increases and enabling high-quality, custom audio clips to be produced entirely offline on smartphones. This indicates a future where more complex generative tasks, traditionally confined to the cloud, become increasingly feasible locally.

- Hybrid AI Architectures: Synergizing Local and Cloud Capabilities: The most pragmatic and likely future for AI is a hybrid approach, intelligently combining the strengths of both on-device and cloud AI. In this model, on-device AI will handle basic tasks, real-time processing, and privacy-sensitive interactions due to its low latency and data localization benefits. Conversely, cloud AI will be leveraged for more complex analysis, large-scale model training, and tasks requiring vast, frequently updated datasets or immense computational power. Apple’s strategy, for instance, involves an on-device foundation model complemented by a mixture-of-experts server-based model for Private Cloud Compute, exemplifying this balanced approach. This intelligent synergy allows for optimal performance, privacy, and scalability across the entire AI ecosystem.

- The Growing Market and Ethical Considerations: The global on-device AI market is projected for substantial growth, from an estimated USD 8.60 billion in 2024 to USD 36.64 billion by 2030, driven by escalating privacy concerns, the demand for real-time processing, and continuous hardware advancements. As AI becomes more ubiquitous on devices, there will be an increasing focus on Explainable AI (XAI) to foster transparency and trust in AI decision-making. Furthermore, ethical AI development will remain a critical concern, addressing biases, ensuring fairness, and complying with evolving regulations to build deeply personal products that represent users authentically and avoid perpetuating stereotypes.
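The LoRA customization mentioned in the list above keeps the original weight matrix frozen and learns a small low-rank correction instead. A minimal sketch of the idea follows, using plain Python lists rather than a real tensor library. The `alpha / r` scaling follows the common convention, and the matrix sizes in the parameter count are illustrative assumptions, not figures for any specific model.

```python
def matmul(a, b):
    """Multiply two matrices stored as lists of rows."""
    return [[sum(a[i][k] * b[k][j] for k in range(len(b)))
             for j in range(len(b[0]))]
            for i in range(len(a))]


def lora_effective_weight(w, a, b, alpha):
    """Return W + (alpha / r) * B @ A.

    W is d_out x d_in (frozen), A is r x d_in and B is d_out x r (trainable).
    """
    r = len(a)
    delta = matmul(b, a)
    scale = alpha / r
    return [[w_ij + scale * d_ij for w_ij, d_ij in zip(w_row, d_row)]
            for w_row, d_row in zip(w, delta)]


def lora_param_counts(d_out, d_in, r):
    """Return (parameters in the full matrix, parameters in the adapter)."""
    return d_out * d_in, r * (d_in + d_out)


# Tiny worked example with rank r = 1.
w = [[1.0, 0.0], [0.0, 1.0]]      # frozen base weight
a = [[1.0, 2.0]]                  # r x d_in
b_zero = [[0.0], [0.0]]           # B starts at zero, so the model is unchanged
b_trained = [[0.5], [0.0]]        # hypothetical values after fine-tuning

unchanged = lora_effective_weight(w, a, b_zero, alpha=2.0)
adapted = lora_effective_weight(w, a, b_trained, alpha=2.0)

# For an assumed 4096 x 4096 layer with rank 8:
full_params, adapter_params = lora_param_counts(4096, 4096, 8)
print(full_params, adapter_params)  # 16777216 65536
```

In this assumed configuration the adapter holds 256 times fewer parameters than the layer it modifies, which is why storing and training per-user or per-task adapters is plausible on a device that could not fine-tune the full model.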

The future outlook reveals a powerful feedback loop between technological innovation and market demand. Advancements in hardware, such as more powerful NPUs and the exploration of neuromorphic computing, enable more complex on-device AI capabilities, including advanced generative AI and LLMs. This, in turn, fuels user demand for more intelligent, personalized, and private device experiences. This increased demand then drives further investment in hardware and software optimization, creating a continuous, rapid evolution of the on-device AI landscape and consistently pushing the boundaries of what is possible on personal devices.
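The hybrid architecture described in the list above ultimately comes down to a routing decision per request. The sketch below shows one possible policy, assuming hypothetical request attributes and an arbitrary token limit for the local model. Real systems would use richer signals, and nothing here reflects how any specific vendor routes requests.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Request:
    prompt_tokens: int
    contains_sensitive_data: bool
    needs_fresh_data: bool


def route(request: Request, network_available: bool,
          on_device_token_limit: int = 2048) -> str:
    """Return "device" or "cloud" for a request.

    Policy (an assumption for illustration):
    1. Offline or privacy-sensitive requests always stay on the device.
    2. Requests that need fresh data or exceed the local limit go to the cloud.
    3. Everything else runs locally for lower latency.
    """
    if not network_available or request.contains_sensitive_data:
        return "device"
    if request.needs_fresh_data or request.prompt_tokens > on_device_token_limit:
        return "cloud"
    return "device"


summary = Request(prompt_tokens=300, contains_sensitive_data=False, needs_fresh_data=False)
health_note = Request(prompt_tokens=5000, contains_sensitive_data=True, needs_fresh_data=False)
news_query = Request(prompt_tokens=50, contains_sensitive_data=False, needs_fresh_data=True)

print(route(summary, network_available=True))      # device
print(route(health_note, network_available=True))  # device
print(route(news_query, network_available=True))   # cloud
print(route(news_query, network_available=False))  # device
```

This policy deliberately puts privacy ahead of capability: a sensitive request stays local even when it exceeds the local limit, so a real implementation would also need a fallback such as truncation, a degraded answer, or an explicit user prompt before sending anything to the cloud.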

VI. Conclusion: The Ubiquitous and Secure Future of AI

On-device artificial intelligence marks a pivotal shift in the broader AI landscape, fundamentally altering how intelligent systems are deployed and consumed. This transformation moves significant processing power from distant cloud servers directly to personal devices, including laptops, tablets, and smartphones. This foundational change delivers substantial benefits, notably ultra-low latency, which is critical for real-time responsiveness and immediate decision-making in a multitude of applications. Crucially, it also enhances user privacy by keeping sensitive data localized, thereby minimizing transmission risks and giving users greater control over their personal information. Furthermore, on-device AI can improve energy efficiency by reducing the computational and environmental footprint associated with large-scale cloud operations.

The market is already rich with offerings, showcasing the pervasive integration of on-device AI. From intelligent camera features and highly responsive voice assistants on smartphones to productivity-enhancing AI tools on laptops, these capabilities are powered by specialized hardware like Neural Processing Units (NPUs) and supported by robust software frameworks. This widespread adoption is not merely an incremental improvement but a fundamental redefinition of how users interact with their technology, making it more intuitive, personalized, and seamlessly integrated into daily life, even in offline environments.

Concurrently, on-device AI is redefining data security paradigms, establishing privacy as a core design principle rather than an afterthought. Techniques such as federated learning, which enables collaborative model training without centralizing raw data, and differential privacy, which mathematically bounds how much any single individual’s data can influence a result, are building a robust foundation for secure and confidential AI interactions. Moreover, the increasing reliance on hardware-level security measures, particularly Trusted Execution Environments (TEEs), helps ensure that sensitive computations are isolated and protected at the deepest layers of the computing stack, providing resilience against sophisticated cyber threats.

While challenges persist in balancing hardware constraints with the demands of increasingly complex models, optimizing performance, and managing updates across diverse devices, the trajectory of on-device AI is clear. The industry is rapidly progressing towards more powerful and efficient AI chips, sophisticated model compression techniques, and the widespread adoption of hybrid AI architectures that intelligently leverage both local and cloud capabilities. This ongoing innovation, coupled with a growing focus on ethical AI development and explainability, points towards a future where intelligent agents are not just in our pockets, but deeply embedded in the very fabric of our personal computing experience, making AI truly ubiquitous, performant, and secure.
