Introduction: The Imperative of AI Governance for C-Level Leaders

Acknowledging Executive Concerns about AI’s Risks and the Growing Regulatory Complexity

The rapid proliferation of AI technologies has undeniably captured the attention of C-level executives across industries. While many recognize AI’s transformative potential, a significant segment harbors legitimate concerns regarding its inherent risks. These apprehensions are not abstract; they are grounded in tangible business threats, including the potential for AI errors and “hallucinations,” an increased likelihood of privacy violations or data breaches, and substantial liability or legal accountability in cases of AI misuse. Such risks directly impact an organization’s business continuity, operational efficiency, and, crucially, its brand reputation.

A notable disparity exists between the confidence C-suite executives express in their AI systems and the actual maturity of their organizations’ governance controls. Although 72% of executives report integrating and scaling AI across their initiatives, only a third of companies have implemented proper protocols to adhere to responsible AI principles. This gap represents a critical vulnerability that regulatory frameworks are designed to address. The apprehension some executives express toward AI is often a rational response to these quantifiable business risks. This report therefore frames AI regulation not as an additional burden but as a strategic tool for risk mitigation and business protection, directly addressing core concerns about liability, financial penalties, and data security. Understanding these regulatory nuances can turn AI from a perceived threat into a responsibly managed asset.

Setting the Stage: Why Understanding Global AI Regulation is Crucial for Strategic Decision-Making

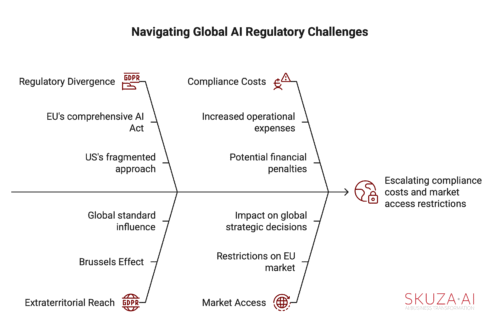

For global enterprises, the philosophical and practical divergence between the European Union’s comprehensive AI Act and the United States’ fragmented approach presents a formidable challenge. This divergence can lead to escalating compliance costs, significant operational complexities, and potential restrictions on market access. The implications extend far beyond regional borders.

Like the General Data Protection Regulation (GDPR) before it, the EU AI Act has significant extraterritorial reach. It applies to organizations established in the EU and also to providers that place AI systems on the EU market, wherever they are based. It further covers providers and deployers outside the EU whose systems’ output is used in the Union. Few multinationals can afford to give up the EU market, so its rules tend to become a de facto global standard. This dynamic is often called the “Brussels Effect.” C-level executives must therefore develop a sophisticated understanding of EU regulation, regardless of where their company is domiciled. Failure to comply can impede global market access and incur severe financial penalties, which makes this a critical consideration in global strategic decision-making.

The European Union’s Comprehensive AI Act: A Risk-Based Blueprint

The European Union has positioned itself as a global leader in AI regulation with the EU AI Act. The Act was signed into law in June 2024 and has been in force since 1 August 2024, with its obligations phasing in between February 2025 and August 2027. This sweeping legislation aims to create a legal framework for trustworthy AI that promotes innovation while mitigating risks to health, safety, fundamental rights, and democratic values.

A Tiered Approach to Risk: Unacceptable, High, Limited, and Minimal Risk AI Systems

The EU AI Act is built on a tiered approach that classifies AI systems by the level of risk their use poses. This structure determines the compliance requirements and obligations that apply to AI system providers and deployers.

At the most stringent level are Unacceptable Risk AI systems. These have been banned outright since 2 February 2025 because they pose a clear threat to people’s safety, livelihoods, and fundamental rights. Prohibited practices include:

- harmful AI-based manipulation and deception
- harmful exploitation of vulnerabilities
- social scoring
- assessing or predicting an individual’s risk of committing a criminal offense based solely on profiling or personality traits
- untargeted scraping of internet or CCTV material to create or expand facial recognition databases
- emotion recognition in workplaces and educational institutions
- biometric categorization to deduce protected characteristics
- real-time remote biometric identification for law enforcement purposes in publicly accessible spaces, subject to narrow exceptions

The explicit ban on these systems signals a strong ethical and societal commitment: in certain sensitive areas, the EU places fundamental rights above unfettered technological development. This preventive stance stands in stark contrast to the United States’ approach, which typically relies on existing laws to address harms after they manifest.

Next are High-Risk AI systems, encompassing a variety of AI use cases that can pose “serious risks” to health, safety, or fundamental rights. Examples span critical sectors:

- AI safety components in critical infrastructures (e.g., transport),

- AI solutions used in education that may determine access to education or professional life (e.g., scoring of exams),

- AI-based safety components of products (e.g., AI applications in robot-assisted surgery),

- AI tools for employment and worker management (e.g., CV-sorting software for recruitment),

- Certain AI use cases utilized to give access to essential private and public services (e.g., credit scoring, denying citizens the opportunity to obtain a loan),

- AI systems used for remote biometric identification, emotion recognition, and biometric categorization,

- AI use cases in law enforcement that may interfere with people’s fundamental rights (e.g., evaluation of the reliability of evidence),

- AI use cases in migration, asylum, and border control management (e.g., automated examination of visa applications),

- And AI solutions used in the administration of justice and democratic processes (e.g., AI solutions to prepare court rulings).

Limited Risk AI systems are subject to specific transparency obligations designed to preserve trust. People must be informed when they are interacting with an AI system such as a chatbot. Providers of generative AI must mark synthetic outputs in a machine-readable format so they can be detected as AI-generated. Deployers must disclose deepfakes, as well as AI-generated text published to inform the public on matters of public interest.
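The following Python sketch makes the labeling obligation concrete. It attaches both a visible disclosure and machine-readable metadata to a piece of generated text. The function `label_ai_content`, the disclosure wording, and the metadata fields (`ai_generated`, `model_id`, `generated_at`) are hypothetical. The Act does not prescribe this schema, and production systems would more likely rely on provenance standards such as C2PA or on watermarking.

```python
import json
from datetime import datetime, timezone

# Hypothetical disclosure wording; the Act requires disclosure but does not fix the text.
DISCLOSURE = "This content was generated by an AI system."


def label_ai_content(text: str, model_id: str) -> dict:
    """Return generated text with a visible notice plus machine-readable metadata."""
    return {
        "visible_text": f"{text}\n\n[{DISCLOSURE}]",
        "metadata": {  # hypothetical schema, not an established standard
            "ai_generated": True,
            "model_id": model_id,
            "generated_at": datetime.now(timezone.utc).isoformat(),
        },
    }


labeled = label_ai_content("Summary of this quarter's market outlook.", model_id="example-model-v1")
print(labeled["visible_text"])
print(json.dumps(labeled["metadata"], indent=2))
```

Keeping the visible notice and the metadata in one record means downstream publishing tools cannot easily strip one without the other.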

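Finally, Minimal Risk AI covers uses that pose little risk, such as spam filters and AI-enabled video games. These systems face few, if any, specific obligations under the Act. Separately, Article 6(3) sets out when a system operating in a listed high-risk area is not treated as high-risk, because it does not pose a significant risk of harm. This applies when the system:

- performs a narrow procedural task
- improves the result of a previously completed human activity
- detects decision-making patterns or deviations from prior patterns without replacing or influencing the human assessment absent proper human review
- performs a preparatory task

A system that profiles natural persons, however, is always considered high-risk.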
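Under Article 6(3) of the Act, a system in a listed high-risk area can escape high-risk classification if it meets one of several conditions, but never if it profiles natural persons. The Python sketch below encodes a simplified reading of that rule. The `AnnexIIISystem` fields and the `is_high_risk` function are hypothetical names. The logic omits the overarching “no significant risk of harm” test and the provider’s duty to document its assessment, so it is an illustration rather than a substitute for legal analysis.

```python
from dataclasses import dataclass


@dataclass
class AnnexIIISystem:
    """Hypothetical profile of an AI system used in one of the Act's listed high-risk areas."""
    name: str
    profiles_natural_persons: bool = False
    narrow_procedural_task: bool = False
    improves_completed_human_activity: bool = False
    detects_patterns_without_replacing_review: bool = False
    preparatory_task_only: bool = False


def is_high_risk(system: AnnexIIISystem) -> bool:
    """Simplified reading of Article 6(3); not legal advice."""
    # Profiling of natural persons keeps the system high-risk whatever else applies.
    if system.profiles_natural_persons:
        return True
    # Meeting any one of the carve-out conditions removes high-risk status.
    carved_out = any((
        system.narrow_procedural_task,
        system.improves_completed_human_activity,
        system.detects_patterns_without_replacing_review,
        system.preparatory_task_only,
    ))
    return not carved_out


cv_ranker = AnnexIIISystem("cv-ranker", profiles_natural_persons=True, preparatory_task_only=True)
form_checker = AnnexIIISystem("application-form-checker", narrow_procedural_task=True)
print(is_high_risk(cv_ranker))     # True
print(is_high_risk(form_checker))  # False
```

The profiling check runs first so that no carve-out can override it, which mirrors the ordering of the rule in the text.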

Table 1: EU AI Act Risk Categories and Core Obligations

| Risk Category | Definition/Examples | Key Obligations (Providers & Deployers) |
| --- | --- | --- |
| Unacceptable | Clear threat to safety, livelihoods, rights (e.g., social scoring, real-time remote biometric identification in public spaces for law enforcement, harmful manipulation) | Banned |
| High | Serious risks to health, safety, fundamental rights (e.g., AI in critical infrastructure, medical devices, employment tools, credit scoring, law enforcement) | Providers: comprehensive risk/quality management, data governance, technical documentation, logging, human oversight, accuracy, robustness, cybersecurity, conformity assessment, EU declaration of conformity, CE marking, registration, corrective actions. Deployers: use per instructions, relevant input data, monitoring, log retention, informing workers/affected persons, registration (public authorities), cooperation with authorities |
| Limited | Need for transparency (e.g., chatbots, deepfakes, AI-generated public interest text) | Specific disclosure obligations (inform users of AI interaction), clear labeling of AI-generated content |
| Minimal | Lower risk (e.g., spam filters, AI-enabled video games) | Few to no specific obligations beyond general safety standards |

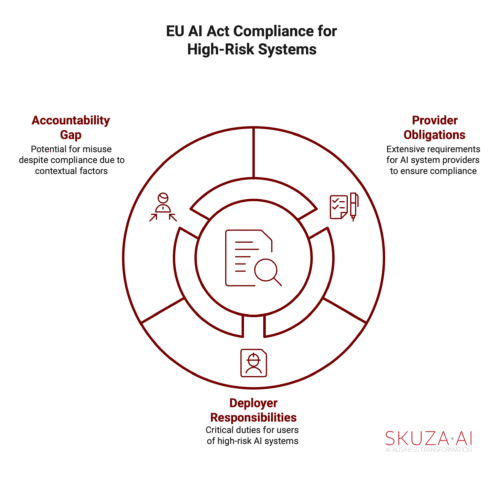

Stringent Obligations for High-Risk AI: Requirements for Design, Development, and Deployment

The EU AI Act places its most rigorous compliance demands on High-Risk AI systems. For providers of such systems, the obligations are extensive and apply before the systems can be placed on the market. Providers must establish:

- a comprehensive risk management system
- a quality management system
- robust data governance and management processes

They must also maintain technical documentation demonstrating compliance with all relevant requirements, keep records that include automatic event logging, and share essential information with deployers so the system is used properly. Furthermore, providers must:

- ensure appropriate human oversight
- achieve a high level of accuracy, robustness, and cybersecurity
- undergo a conformity assessment
- draw up an EU declaration of conformity
- affix the CE marking
- register the system in an EU or national database
- take corrective action if non-compliance is identified

While providers bear the primary burden, deployers (users) of high-risk AI systems also have critical duties. They must:

- use the system in accordance with the provider’s instructions for use
- ensure that any input data under their control is relevant and sufficiently representative for the intended purpose
- monitor the system’s operation
- keep the automatically generated logs for at least six months, unless other law provides otherwise

Employers, as deployers, must inform affected workers and their representatives before putting a high-risk AI system into use in the workplace.

Beyond these direct requirements, however, civil society organizations have observed that the Act imposes “minimal obligations on users.” Public bodies, private entities providing public services, and deployers of certain credit-scoring and insurance-pricing systems must carry out a fundamental rights impact assessment. Other deployers are not explicitly obligated to analyze impacts on fundamental rights, equality, or accessibility, to consult affected groups, or to actively mitigate potential harms beyond following the provider’s instructions. This creates a potential accountability gap. A provider may ensure that an AI system is technically compliant, yet the specific context of its deployment could still lead to fundamental rights violations. For example, a facial authentication system might meet every technical requirement of the Act. Its deployment in a particular shopping center could nonetheless breach data protection and non-discrimination law by enabling disproportionate surveillance. In such cases, liability for contextual misuse may fall back on existing data protection or human rights law rather than on the AI Act itself.
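Article 26(6) requires deployers to keep automatically generated logs for at least six months, unless other Union or national law provides otherwise. That duty lends itself to a simple automated guard. The Python sketch below uses a hypothetical `purgeable` function that returns only the log entries old enough to delete and refuses any retention period shorter than six months. It approximates six months as 183 days, an assumption counsel might replace with a longer, purpose-specific period.

```python
from datetime import datetime, timedelta, timezone

# Assumption: "six months" approximated as 183 days; a longer period may be appropriate.
MIN_RETENTION = timedelta(days=183)


def purgeable(log_timestamps: list[datetime], now: datetime,
              retention: timedelta = MIN_RETENTION) -> list[datetime]:
    """Return the log timestamps that are older than the retention period."""
    if retention < MIN_RETENTION:
        raise ValueError("retention period is shorter than the six-month minimum")
    cutoff = now - retention
    return [ts for ts in log_timestamps if ts < cutoff]


now = datetime(2026, 9, 1, tzinfo=timezone.utc)
logs = [
    datetime(2026, 1, 15, tzinfo=timezone.utc),
    datetime(2026, 6, 1, tzinfo=timezone.utc),
]
print(purgeable(logs, now))  # only the January entry is past the cutoff
```

Raising an error on a too-short retention setting makes a misconfiguration fail loudly instead of silently deleting logs the deployer is required to keep.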

Transparency and Accountability: Mandates for General-Purpose AI (GPAI) and Specific Use Cases

Transparency is a cornerstone of the EU AI Act. General-Purpose AI (GPAI) models include the large language models behind generative AI tools. Their providers must:

- maintain technical documentation
- provide information to the downstream providers who build on their models
- put in place a policy to comply with EU copyright law
- publish a sufficiently detailed summary of the content used for training

These requirements are a strategic attempt to address the “black box” problem of complex AI systems, building public trust and enabling more effective oversight. Given the EU’s market influence, this approach could set a global precedent for foundation models, compelling developers worldwide to adopt similar transparency practices in order to access the European market.

Specific AI-generated content, including deepfakes and text published for public interest, must be clearly and visibly labeled as AI-generated. For high-risk AI systems, transparency obligations extend to clearly identifying the provider, specifying the system’s intended purpose, its accuracy, robustness, cybersecurity measures, and associated risks. This comprehensive transparency aims to mitigate risks and protect fundamental rights.

The Act attempts to balance these transparency requirements with the protection of trade secrets and proprietary information. Companies are permitted to share only strictly necessary information with regulators under strict confidentiality clauses and to provide general explanations of system logic without exposing all intricate technical details. Contractual protections, such as non-disclosure agreements (NDAs), can also be used when dealing with users and third parties to limit detailed information disclosure.

Enforcement and Penalties: High Fines and the “Brussels Effect” of Extraterritorial Reach

Non-compliance with the EU AI Act carries substantial financial penalties designed to deter violations. The maximum fines, in each case whichever amount is higher, are:

- €35 million or 7% of a company’s total worldwide annual turnover for prohibited AI practices
- €15 million or 3% for breaches of most other obligations, including those for high-risk systems and transparency
- €7.5 million or 1% for supplying incorrect, incomplete, or misleading information to authorities

For SMEs and start-ups, the lower of the two amounts applies. These penalties are among the highest set by any AI regulation, underscoring the EU’s commitment to enforcement.
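Each cap is expressed as a fixed amount or a share of worldwide turnover, so the effective maximum depends on company size. The Python sketch below computes that ceiling using the Article 99 caps as adopted (€35 million/7%, €15 million/3%, €7.5 million/1%). It takes the higher amount for most undertakings and the lower amount for SMEs and start-ups. The category keys and the function name `max_fine_eur` are hypothetical, and actual fines are set case by case below these ceilings.

```python
# Article 99 caps as adopted: (fixed amount in EUR, percent of worldwide annual turnover).
FINE_CAPS = {
    "prohibited_practice": (35_000_000, 7),
    "other_obligation": (15_000_000, 3),
    "incorrect_information": (7_500_000, 1),
}


def max_fine_eur(violation: str, worldwide_turnover_eur: int, is_sme: bool = False) -> float:
    """Upper bound of the fine; regulators set actual amounts case by case."""
    fixed, percent = FINE_CAPS[violation]
    turnover_based = worldwide_turnover_eur * percent / 100
    # Most undertakings face whichever amount is higher; SMEs and start-ups, whichever is lower.
    cap = min(fixed, turnover_based) if is_sme else max(fixed, turnover_based)
    return float(cap)


print(max_fine_eur("prohibited_practice", 2_000_000_000))             # 140000000.0
print(max_fine_eur("prohibited_practice", 100_000_000))               # 35000000.0
print(max_fine_eur("prohibited_practice", 100_000_000, is_sme=True))  # 7000000.0
```

Keeping percentages as integers avoids floating-point surprises when turnover figures are large.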

For global enterprises, the Act’s extraterritorial scope is a critical strategic consideration. Like the GDPR, the Act applies regardless of where an organization is headquartered. A company based in the US, Asia, or elsewhere falls within scope if it places AI systems on the EU market or puts them into service there, or if the output of its AI systems is used in the EU. Combined with the size of the European market, this “Brussels Effect” compels global companies to align their AI development and deployment practices with EU standards. Substantial financial penalties and extraterritorial reach together turn AI compliance from a legal formality into a strategic imperative. Non-compliance carries severe financial risk and directly affects market access and competitive positioning, so proactive adherence is essential for long-term viability.

The United States’ Evolving AI Landscape: A Fragmented Ecosystem

In stark contrast to the European Union’s unified and comprehensive approach, the United States’ AI regulatory landscape is best characterized as a dynamic and fragmented ecosystem. This decentralized model relies heavily on existing laws, executive guidance, and a growing patchwork of state-level regulations.

A Decentralized and Sector-Specific Approach: Reliance on Existing Laws and Voluntary Frameworks

The United States’ approach to AI regulation is a “patchwork” system, highly decentralized across various federal agencies and individual states. Unlike the EU’s single, comprehensive AI Act, the US primarily relies on adapting existing laws and issuing voluntary guidelines rather than enacting bespoke AI-specific legislation at the federal level. This strategy aims to balance public safety and civil rights concerns with a widespread assumption that US technology companies must be allowed to innovate freely for the country to maintain its global competitive edge. While this fragmented approach may be perceived as “pro-innovation” by avoiding a single, potentially burdensome federal framework, it simultaneously creates significant complexity, uncertainty, and increased compliance costs for businesses operating nationwide. This requires a highly agile and multi-layered compliance strategy, as companies must navigate diverse and sometimes conflicting requirements across various jurisdictions.

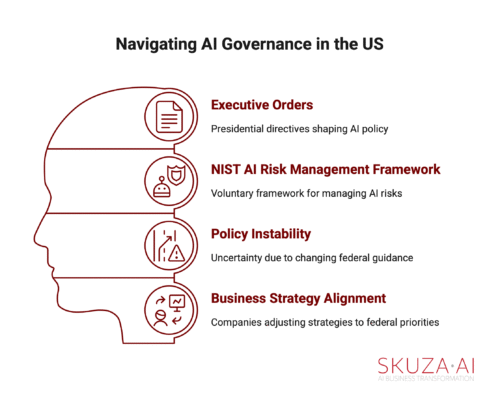

Federal Initiatives: The Role of Executive Orders and the NIST AI Risk Management Framework (AI RMF)

The federal government’s stance on AI governance has demonstrated fluidity, particularly with changes in administration. President Biden’s Executive Order 14110, issued in October 2023, focused on the safe, secure, and trustworthy development and use of AI, aiming to mitigate societal harms such as fraud, discrimination, bias, and disinformation. However, this Executive Order was notably rescinded on January 20, 2025.

President Trump’s subsequent Executive Order 14179, issued in January 2025, explicitly emphasizes “removing barriers to American Leadership in Artificial Intelligence,” promoting a “pro-innovation” and “pro-business AI ecosystem”. Complementing this, the White House Office of Management and Budget (OMB) issued new AI guidelines in April 2025 for federal AI use and procurement, signaling a policy shift towards a “forward-leaning, pro-innovation, and pro-competition mindset”. This rapid succession and philosophical divergence of federal executive guidance create significant policy instability and uncertainty for businesses. This compels companies to constantly re-evaluate their strategic alignment with federal priorities, even when these directives are largely voluntary or focused on government procurement, adding a layer of unpredictability to long-term AI strategy.

In parallel, the National Institute of Standards and Technology (NIST) has developed the AI Risk Management Framework (AI RMF), a voluntary framework for managing the risks associated with AI. It aims to foster trustworthy AI, encompassing characteristics such as accuracy, explainability, reliability, privacy, robustness, safety, security, and the management of harmful bias. The AI RMF is consensus-driven, adaptable, and written in plain language, which makes it accessible to a broad audience, including senior executives. Its four core functions (Govern, Map, Measure, and Manage) provide a systematic approach to risk management. Notably, some state laws allow substantial compliance with the NIST AI RMF to serve as an affirmative defense against enforcement actions. One example is Texas’s Responsible Artificial Intelligence Governance Act (TRAIGA). This highlights the growing importance of voluntary frameworks in the absence of comprehensive federal legislation.
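The AI RMF Core is organized into four functions: Govern, Map, Measure, and Manage. These can double as a coverage checklist for an AI governance program. In the Python sketch below, a hypothetical activity register tags each control with the function it supports and reports any function that has no activities. The register entries and the `uncovered_functions` helper are illustrative and are not drawn from NIST.

```python
from enum import Enum


class RmfFunction(Enum):
    GOVERN = "Govern"
    MAP = "Map"
    MEASURE = "Measure"
    MANAGE = "Manage"


# Hypothetical activity register: (activity, AI RMF function it supports).
activities = [
    ("Board-approved AI use policy and accountability roles", RmfFunction.GOVERN),
    ("Inventory of AI systems and their contexts of use", RmfFunction.MAP),
    ("Quarterly accuracy and bias testing", RmfFunction.MEASURE),
]


def uncovered_functions(register: list[tuple[str, RmfFunction]]) -> list[str]:
    """List AI RMF functions that no activity in the register addresses."""
    covered = {function for _, function in register}
    return [function.value for function in RmfFunction if function not in covered]


print(uncovered_functions(activities))  # ['Manage']
```

Here the gap is Manage: risks are identified and measured, but nothing in the register assigns responses or prioritization.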

The Patchwork of State Laws: Key State-Level Regulations

In the absence of comprehensive federal AI legislation, individual states have become increasingly active in regulating AI, leading to a complex and often conflicting “patchwork” of diverse laws. This trend is significant, with over 250 health AI-related bills introduced across 34 states in a single year, and 45 states introducing AI bills in 2024. The failure of a proposed federal AI moratorium on state regulation means that this state-level “patchwork” will continue to grow and diversify. This forces businesses to manage highly granular, jurisdiction-specific compliance requirements, significantly increasing operational complexity, legal costs, and the risk of non-compliance across their diverse operations.

Key state-level regulations include:

- Colorado’s SB 205 (effective February 2026): This landmark legislation is poised to create one of the most detailed state-level AI regulatory schemes in the country. It imposes significant obligations on businesses using “high-risk” AI systems, including those in employment. Key requirements include conducting impact assessments, disclosing AI use to individuals, and taking steps to mitigate discrimination.

- Texas Responsible Artificial Intelligence Governance Act (TRAIGA, effective January 2026): TRAIGA takes a unique, “pro-business” approach, distinguishing itself from the EU and Colorado by not categorizing AI systems by risk level and having narrow transparency requirements. Its most restrictive provisions are largely limited to state government entities and healthcare providers, imposing minimal burdens on most private-sector organizations outside of specific prohibitions against unlawful discrimination or the creation of child sexual abuse material (CSAM). TRAIGA includes a 60-day notice-and-cure period for violations and exclusively civil penalties, not criminal.

- New York City’s Local Law 144 (enforced since July 2023): This pioneering local law regulates automated employment decision tools (AEDTs) used in hiring or promotion decisions. It requires an independent bias audit within the year before use, public posting of a summary of the results, and notice to candidates and employees.

- Illinois Artificial Intelligence Video Interview Act (effective January 2020): This law requires employers using AI to analyze recorded video interviews of job applicants to notify candidates, obtain consent, and share how the technology works.

- California: Regulators are actively adopting new procedural rules to enforce anti-discrimination laws as they apply to automated decision systems (ADS), with potential effectiveness as early as October 2025. California has also led efforts to regulate AI-generated political misinformation.
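New York City’s bias audits center on the impact ratio: each category’s selection rate divided by the selection rate of the most-selected category. The Python sketch below computes it for a selection-style tool. The category names and counts are invented for illustration, and a real audit must cover the sex, race/ethnicity, and intersectional categories that the city’s rules specify. Local Law 144 requires publishing the results but does not itself set a pass/fail threshold.

```python
def impact_ratios(applicants: dict[str, int], selected: dict[str, int]) -> dict[str, float]:
    """Selection rate of each category divided by the highest category's selection rate."""
    rates = {group: selected[group] / applicants[group] for group in applicants}
    highest = max(rates.values())
    if highest == 0:
        raise ValueError("no candidates were selected in any category")
    return {group: rate / highest for group, rate in rates.items()}


# Invented counts for two illustrative categories.
ratios = impact_ratios(
    applicants={"Category A": 200, "Category B": 150},
    selected={"Category A": 60, "Category B": 30},
)
print({group: round(ratio, 2) for group, ratio in ratios.items()})
# {'Category A': 1.0, 'Category B': 0.67}
```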

Agency-Specific Enforcement: How Federal Agencies Apply Existing Mandates to AI

In the absence of a comprehensive federal AI law, various federal agencies are actively applying existing, broad legal frameworks to AI, creating a highly complex and often unpredictable enforcement environment. Businesses face potential liability under multiple, often overlapping, statutes, demanding sophisticated legal interpretation and proactive risk management across diverse operational domains.

- Federal Trade Commission (FTC): The FTC uses its authority under Section 5 of the FTC Act to police “unfair or deceptive acts or practices” in the context of AI. This includes scrutinizing exaggerated performance claims about AI-powered products, false labeling of products as AI-driven, opaque data practices (especially involving biometric or personal data), and bias or discrimination in AI decision-making systems. The FTC has taken enforcement actions against companies for deceptive claims, such as Workado and Cleo AI. It has also acted against companies that failed to prevent consumer harm from AI misuse, as in the case of Rite Aid’s use of facial recognition technology.

- Department of Justice (DOJ): The DOJ has signaled a tough stance on crimes involving the misuse of AI, asserting that existing laws apply. Then-Deputy Attorney General Lisa Monaco stated explicitly: “Discrimination using AI is still discrimination; Price fixing using AI is still price fixing; Identity theft using AI is still identity theft”. The DOJ has said it will seek tougher sentences for offenses “made significantly more dangerous” by AI. The Civil Rights Division focuses specifically on issues at the intersection of AI and civil rights, issuing guidance on disability discrimination in hiring and addressing algorithmic bias in housing. The Department also uses the False Claims Act to target civil rights violations by recipients of federal funds, including those involving “racist preferences, policies, programs, and activities, including through… DEI programs”.

- Department of Health and Human Services (HHS) / Office for Civil Rights (OCR): HHS, through OCR, has issued guidance on the use of AI in healthcare, emphasizing nondiscrimination under Section 1557 of the Affordable Care Act and compliance with HIPAA. OCR requires covered entities to make reasonable efforts to identify patient care decision support tools, including AI tools, that use race, color, national origin, sex, age, or disability as input variables. Covered entities must then mitigate the resulting risk of unlawful discrimination. HIPAA applies in full to AI systems that process Protected Health Information (PHI). The Security Rule, the Minimum Necessary Standard for data access, de-identification requirements, and Business Associate Agreements (BAAs) with AI vendors all remain in force. In practice, compliance also calls for AI-specific risk assessment, lifecycle management, and patch management for AI vulnerabilities. Human oversight of AI decisions is strongly recommended.

- Consumer Financial Protection Bureau (CFPB): The CFPB applies the Equal Credit Opportunity Act (ECOA) and the Fair Credit Reporting Act (FCRA) to AI models used in lending and credit scoring. It requires “adverse action notices” to consumers, providing specific reasons for credit denial or other adverse decisions, even when complex AI algorithms are involved. The CFPB emphasizes that nontraditional factors used by AI models present elevated risks if they do not align with consumer “expectations” about traditional credit underwriting criteria.

- Equal Employment Opportunity Commission (EEOC): While Biden-era guidance on AI in the workplace was removed from agency websites following a change in administration, existing federal anti-discrimination laws, such as Title VII of the Civil Rights Act and the Americans with Disabilities Act (ADA), remain fully applicable to AI-driven hiring and workplace decision-making. Employers remain liable for disparate impact discrimination and disability discrimination, even if the bias is unintentional or the AI tool was developed and implemented by a third-party vendor.

- Gramm-Leach-Bliley Act (GLBA): This federal law requires financial institutions to safeguard sensitive customer data through a comprehensive information security program, as set out in the FTC’s Safeguards Rule. Key requirements include:
  - designating a qualified individual to oversee the program
  - identifying and assessing risks
  - implementing safeguards
  - continuous monitoring and testing
  - staff training
  - oversight of service providers, including contractual security requirements
  - a written incident response plan

  AI-enhanced identity governance solutions are increasingly used to support GLBA compliance by identifying anomalous access patterns and streamlining access reviews.
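HHS requires covered entities to identify tools that use protected characteristics as inputs, and that work can start with a simple inventory screen. The Python sketch below flags tools whose declared input variables include race, color, national origin, sex, age, or disability. The tool names, variable names, and the `flag_tools` function are hypothetical. Name matching catches only explicit variables; proxies such as ZIP code would require separate statistical review.

```python
# Characteristics listed in the Section 1557 rule on patient care decision support tools.
PROTECTED_INPUTS = {"race", "color", "national_origin", "sex", "age", "disability"}


def flag_tools(inventory: dict[str, list[str]]) -> dict[str, list[str]]:
    """Map each tool to the protected characteristics among its declared input variables."""
    flagged = {}
    for tool, inputs in inventory.items():
        # Case-insensitive match of declared variable names against the protected set.
        hits = sorted(PROTECTED_INPUTS.intersection(name.lower() for name in inputs))
        if hits:
            flagged[tool] = hits
    return flagged


# Hypothetical inventory of decision support tools and their input variables.
inventory = {
    "sepsis-alert": ["heart_rate", "Age", "lactate"],
    "no-show-predictor": ["prior_visits", "distance_km"],
}
print(flag_tools(inventory))  # {'sepsis-alert': ['age']}
```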

Here is a summary of key US Federal Agencies and their AI regulatory focus:

Table 2: Key US Federal Agencies and Their AI Regulatory Focus

| Federal Agency | Primary Regulatory Focus (for AI) | Relevant Laws/Guidelines | Examples of Enforcement/Guidance |
| --- | --- | --- | --- |
| FTC | Consumer protection, deceptive claims, bias, privacy | FTC Act Section 5 | Rite Aid facial recognition misuse, deceptive AI claims (Workado, Cleo AI) |
| DOJ | Civil rights, criminal misuse, bias in law enforcement | Civil Rights Act, ADA, False Claims Act | Algorithmic bias in housing, tougher sentences for AI misuse, civil rights fraud initiative |
| HHS/OCR | Healthcare AI, HIPAA compliance, non-discrimination | HIPAA, ACA Section 1557 | Nondiscrimination in patient care, HIPAA compliance for AI systems |
| CFPB | Financial services, credit scoring, fair lending | FCRA, ECOA | Adverse action notices for AI credit decisions, scrutiny of non-traditional data factors |
| EEOC | Employment discrimination, bias in hiring | Title VII, ADA | Disparate impact in AI hiring, liability for vendor tools |

Key Divergences and Convergences: EU vs. US Regulatory Philosophies

The distinct approaches to AI regulation in the European Union and the United States reflect deeply rooted differences in their legal traditions, societal values, and economic priorities. Understanding these fundamental divergences, alongside nascent areas of convergence, is crucial for developing a coherent global AI strategy.

Proactive vs. Reactive: Fundamental Differences in Regulatory Philosophy

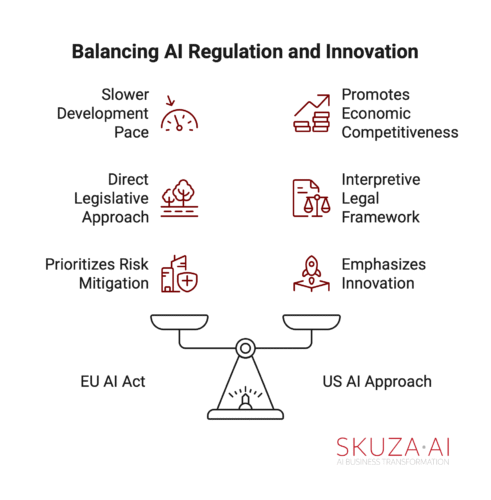

The EU AI Act represents a “proactive” and comprehensive legal framework specifically designed to regulate AI, aiming to shape the market and mitigate risks before they manifest. Its foundation is rooted in “democratic oversight and rights-based values”, emphasizing pre-emptive ethical and safety considerations. This approach seeks to establish clear boundaries and obligations upfront, providing a structured environment for AI development and deployment within the EU.

In contrast, the US adopts a more “reactive” and decentralized approach, primarily relying on adapting existing laws and issuing voluntary guidelines. The US strategy prioritizes fostering innovation and allowing market forces to drive technological development, with enforcement actions typically occurring after harms or deceptive practices are identified. This fundamental philosophical divide reflects differing societal priorities: the EU prioritizes fundamental rights, safety, and ethical considerations through pre-emptive, binding regulation, while the US prioritizes economic competitiveness and rapid innovation, addressing risks primarily through post-hoc enforcement and voluntary industry standards. This divergence directly influences the speed of AI deployment, the nature of compliance burdens, and the overall risk appetite for AI innovation in each region.

Risk Classification and Scope: Standardized EU Categories versus a Lack of Unified US Framework

A significant practical divergence lies in risk classification. The EU AI Act provides a clear, harmonized, and tiered risk classification system (Unacceptable, High, Limited, Minimal), which offers a predictable and standardized framework for businesses operating across its 27 member states. This allows companies to understand their obligations based on the inherent risk of their AI systems.

Conversely, the US federal landscape lacks a unified, comprehensive risk-based framework for AI. While some states, like Colorado, are adopting high-risk classifications with stringent obligations, others, such as Texas, explicitly choose not to categorize AI systems by risk level. The absence of a consistent, federally mandated AI risk classification in the US means that multinational businesses must undertake their own complex, multi-jurisdictional risk assessments to navigate disparate state-level definitions. This lack of uniformity can lead to higher internal compliance costs, greater legal uncertainty, and a less streamlined approach compared to the EU’s more standardized and predictable framework.

Compliance Burden and Operational Impact: Centralized EU Compliance vs. Navigating a Fragmented US Landscape

The operational impact of these differing philosophies is profound. The EU AI Act, despite its stringent requirements, offers a single, harmonized legal framework that applies consistently across all 27 member states. Once a business understands and implements compliance measures for the EU AI Act, it can apply those standards across the entire bloc, potentially simplifying long-term compliance management. The initial investment in compliance may be substantial, but the uniformity across the region offers scalability.

In contrast, the US’s “patchwork” of federal agency guidance, diverse state-level laws, and local ordinances creates a highly complex and potentially conflicting compliance burden for companies operating nationwide. This fragmentation necessitates “careful, jurisdiction-specific planning”, and executives anticipate that complying with this evolving landscape will significantly increase technology costs, requiring outside counsel and continuous updates to practices. While the EU’s framework is more prescriptive, its uniformity across member states may ultimately lead to a more predictable and scalable compliance strategy for businesses. In contrast, the dynamic and fragmented US landscape demands constant monitoring, adaptation, and potentially distinct operational procedures for each jurisdiction, leading to higher ongoing operational complexity and legal overhead.
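A machine-readable registry recording which laws apply to which use cases, in which jurisdictions, is one way to keep jurisdiction-specific planning manageable. The Python sketch below is seeded only with laws discussed in this report and is deliberately incomplete; Colorado’s law, for example, reaches well beyond employment. The `Rule` layout and the `obligations_for` function are hypothetical, and a real registry would need legal review and continuous updates.

```python
from typing import NamedTuple


class Rule(NamedTuple):
    jurisdiction: str
    law: str
    use_cases: frozenset
    duty: str


# Hypothetical, deliberately incomplete registry built from laws discussed in this report.
REGISTRY = [
    Rule("New York City", "Local Law 144", frozenset({"employment"}),
         "independent bias audit; candidate and employee notice"),
    Rule("Illinois", "Artificial Intelligence Video Interview Act", frozenset({"employment"}),
         "notice, explanation, and consent for AI analysis of video interviews"),
    Rule("Colorado", "SB 205", frozenset({"employment"}),
         "impact assessments; AI-use disclosure; discrimination mitigation"),
    Rule("Texas", "TRAIGA", frozenset({"healthcare"}),
         "disclosure of AI use by healthcare providers"),
]


def obligations_for(use_case: str, footprint: set[str]) -> list[Rule]:
    """Return registry entries for a use case in the jurisdictions where the business operates."""
    return [r for r in REGISTRY if r.jurisdiction in footprint and use_case in r.use_cases]


for rule in obligations_for("employment", {"New York City", "Colorado", "Texas"}):
    print(f"{rule.jurisdiction}: {rule.law} -> {rule.duty}")
```

Storing the rules as data rather than scattered policy documents makes it easier to update a single entry when a law changes or a new jurisdiction acts.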

Data Governance, Privacy, and Transparency: Contrasting Requirements and Approaches

Data governance, privacy, and transparency are central to both regulatory environments, but their integration and emphasis differ. In the EU, the AI Act explicitly makes robust data governance and management processes a core requirement for high-risk AI systems. Transparency is equally central: providers of GPAI models must publish summaries of the content used for training, and AI-generated content must be clearly labeled. Privacy by design is embedded in the development process; it is a GDPR principle that continues to apply alongside the Act. By building data governance and transparency directly into its AI regulatory framework, the EU creates a more coherent and integrated compliance challenge.

In the US, data privacy is addressed primarily through a mosaic of sector-specific laws, such as HIPAA for healthcare and GLBA for financial institutions. Transparency requirements are generally narrower and are often limited to specific sectors or government entities (e.g., healthcare providers in Texas). The NIST AI RMF promotes transparency and privacy as best practices, but it remains voluntary at the federal level. US companies must therefore navigate disparate privacy and data security laws that were not designed with AI in mind, which can lead to compliance gaps, overlaps, or inefficiencies when those laws are applied to novel AI systems.

Addressing Bias and Discrimination: Explicit EU Prohibitions versus Reliance on Existing US Civil Rights Laws

The approach to algorithmic bias and discrimination reveals another significant divergence. The EU AI Act addresses it directly by banning practices deemed to pose an “unacceptable risk,” including several with high discriminatory potential (e.g., social scoring and biometric categorization that infers protected characteristics such as race or sexual orientation). High-risk systems must also use high-quality training, validation, and testing datasets that are examined for possible biases, to minimize discriminatory outcomes. This direct legislative approach gives businesses clearer, AI-specific guidelines.

The US relies primarily on existing civil rights and anti-discrimination laws, such as Title VII of the Civil Rights Act, the Americans with Disabilities Act (ADA), the Equal Credit Opportunity Act (ECOA), and the Fair Housing Act. These laws are enforced by agencies such as the EEOC, the DOJ Civil Rights Division, and the CFPB. They apply to AI, but they were not designed with algorithmic bias in mind, so agencies must interpret and extend them to new AI contexts. Some states, such as Colorado, have begun requiring impact assessments and reasonable care against algorithmic discrimination for high-risk AI systems. Reliance on broader, general-purpose anti-discrimination laws creates a more ambiguous and potentially reactive environment. It also places a greater burden on companies to identify and mitigate bias proactively without explicit, AI-tailored federal guidance.

Innovation vs. Regulation: Balancing Economic Competitiveness with Ethical Safeguards

The differing emphasis on innovation versus regulation significantly shapes the competitive landscape for AI development and deployment. While the EU acknowledges the importance of promoting innovation, the AI Act explicitly prioritizes mitigating risks to human rights, safety, and democratic values through stringent and comprehensive regulation. This reflects a belief that trustworthy AI will ultimately foster sustainable innovation, even if it means a slower initial pace of deployment.

The US, by contrast, strongly emphasizes AI innovation and economic competitiveness. Recent federal executive orders promote a “pro-innovation” and “pro-business AI ecosystem” and focus on “removing barriers to American AI innovation.” The failure of several broad state AI bills, attributed in part to “innovation concerns,” further underscores this priority. Companies subject to the EU AI Act may face higher initial compliance costs and slower market entry, but they could gain a “trustworthy AI” competitive advantage and greater consumer trust. Conversely, companies operating mainly under the US regime may innovate and deploy AI faster, but they could face higher litigation risk, unpredictable enforcement actions, and reputational damage under a less prescriptive, more reactive regulatory model.

Strategic Imperatives for C-Level Executives: Navigating the Global AI Regulatory Maze

Given the complex and divergent global AI regulatory landscape, C-level executives must adopt a proactive and sophisticated strategy to manage risks, ensure compliance, and maintain competitive advantage.

Conducting a Comprehensive AI Risk Assessment: Identifying and Classifying AI Use Cases Across the Organization

A thorough internal AI risk assessment is paramount, particularly given the EU’s risk-based approach and the emerging high-risk classifications in some US states, such as Colorado. Organizations should consider using the NIST AI RMF, specifically its four core functions (Govern, Map, Measure, and Manage), to identify and evaluate AI risks systematically. Although the framework is voluntary, it reflects widely accepted best practices, and conformance with it can support an affirmative defense in some US contexts (e.g., under Texas’s TRAIGA). A single, global AI risk assessment framework built on such principles can provide a robust compliance baseline across both the EU and the US. It helps identify the risks regulated in both jurisdictions, such as bias, privacy, and security, even though each jurisdiction regulates them through different mechanisms. This enables a more efficient “compliance-by-design” strategy, in which foundational risk controls are embedded from the outset.

Developing a Robust AI Governance Framework: Integrating Legal, Ethical, and Operational Considerations

Beyond compliance itself, organizations need a robust AI governance framework. This framework should clearly delineate roles and responsibilities, establish accountability structures, and define internal policies that satisfy both stringent EU mandates and diverse US agency guidelines. It should also incorporate responsible AI principles such as accountability, transparency, fairness, and security. Survey data indicate that many organizations lag in deploying responsible AI practices at scale, which underscores the urgency of this work. A governance framework grounded in ethical principles also serves as a strategic differentiator. By demonstrating a proactive commitment to responsible AI, it builds consumer trust and may reduce future regulatory scrutiny. It can also strengthen brand reputation and customer loyalty.

Ensuring Cross-Jurisdictional Compliance: Strategies for Managing Divergent Requirements

Navigating the global AI regulatory maze requires a deliberate approach to cross-jurisdictional compliance. The “compliance-by-design” philosophy is critical: embed AI risk controls into the development lifecycle from the outset rather than retrofitting systems for each jurisdiction. This approach requires upfront investment, but it delivers long-term efficiency by building in the flexibility to meet evolving global standards, turning a regulatory challenge into a foundational operational strength.

Organizations should establish a centralized AI compliance function to monitor global regulatory developments, while empowering local teams to adapt policies and procedures to specific state or national requirements. This hybrid model ensures consistent adherence to overarching principles while preserving agility for regional nuances. Rigorous vendor management is equally essential. Due diligence and strong contractual safeguards are critical when third-party AI tools handle sensitive data, because deployers can be held responsible for vendor-related discrimination or breaches. In healthcare, for example, these safeguards include robust Business Associate Agreements (BAAs) under HIPAA.

Mitigating Legal, Financial, and Reputational Risks: Proactive Measures and Incident Response

Proactive investment in AI risk management is not merely a cost center. It is a strategic investment that directly reduces the likelihood of costly legal battles, significant fines, and severe reputational damage, all top concerns for C-level executives. It includes continuous monitoring and regular audits for bias, accuracy, robustness, and security vulnerabilities. These measures are crucial both for meeting EU requirements for high-risk systems and for mitigating liability under US anti-discrimination laws.

Appropriate human oversight, including “human-in-the-loop” mechanisms, is also vital to prevent or minimize risks and to allow AI decisions to be overridden when necessary. Transparency and disclosure are equally important. Organizations should clearly communicate AI use to affected individuals and stakeholders and meet specific disclosure obligations (e.g., for chatbots and AI-generated content). Finally, incident response plans tailored to AI-related breaches or harms are essential to contain and mitigate potential damage. These plans should include procedures for meeting prompt notification requirements.

Fostering a Culture of Responsible AI: Building Trust and Competitive Advantage

Moving beyond compliance to foster a genuine “culture of responsible AI” can turn regulatory burdens into a competitive advantage. This involves investing in AI literacy and training for all staff so that everyone understands AI mandates, ethical principles, and appropriate AI use. That training should reach technical teams, legal staff, and, in healthcare settings, clinical personnel. Establishing AI ethics boards or multidisciplinary teams to guide ethical development and deployment can institutionalize this commitment. This proactive approach enhances brand reputation, attracts top talent, and builds deeper trust with customers and regulators in a rapidly evolving market. In this context, compliance becomes a competitive differentiator rather than a burden.

Conclusion: Charting a Course for Responsible AI in a Regulated World

The global AI regulatory landscape is undeniably complex, characterized by the EU’s comprehensive, rights-based framework and the US’s fragmented yet actively evolving approach. This environment demands continuous vigilance and strategic planning from C-level executives. Proactive engagement with AI governance is not simply a compliance exercise; it is a fundamental business imperative that directly impacts market access, financial stability, and brand reputation.

While the EU and US models currently diverge significantly, there are emerging signs of convergence. International bodies such as the OECD, G7, and G20 are developing shared AI principles and standards that align on concepts such as fairness, transparency, and safety. The EU-US Trade and Technology Council has also worked towards shared definitions and standards.

This interplay between global harmonization efforts and persistent regional divergence means that C-level executives must adopt a “dual-track” strategy. On one track, they should participate in and influence global standards where possible. On the other, they should prepare for a complex, multi-jurisdictional compliance reality for the foreseeable future. Absent a single global consensus, companies should expect continued regulatory fragmentation and adopt “compliance-by-design” strategies to navigate multiple, potentially conflicting regimes. The ability to develop, deploy, and govern AI systems responsibly across these diverse regulatory environments will be a defining characteristic of successful global enterprises in the coming decade.

Works cited

- Leaders Increasingly Concerned About AI Adoption Risks, accessed July 6, 2025, https://www.rmmagazine.com/articles/article/2025/07/01/leaders-increasingly-concerned-about-ai-adoption-risks

- EY survey: AI adoption outpaces governance as risk awareness among the C-suite remains low, accessed July 6, 2025, https://www.ey.com/en_br/newsroom/2025/06/ey-survey-ai-adoption-outpaces-governance-as-risk-awareness-among-the-c-suite-remains-low

- Governing the Algorithms: The EU AI Act and Global Regulatory Divergence – Coforge, accessed July 6, 2025, https://www.coforge.com/what-we-know/blog/governing-the-algorithms-the-eu-ai-act-and-global-regulatory-divergence

- EU AI Act: Summary & Compliance Requirements – ModelOp, accessed July 6, 2025, https://www.modelop.com/ai-governance/ai-regulations-standards/eu-ai-act

- Divergent Paths on Regulating Artificial Intelligence | Littler, accessed July 6, 2025, https://www.littler.com/news-analysis/asap/divergent-paths-regulating-artificial-intelligence

- AI Act | Shaping Europe’s digital future – European Union, accessed July 6, 2025, https://digital-strategy.ec.europa.eu/en/policies/regulatory-framework-ai

- Why is Transparency the Key to AI Compliance under the EU AI Act? – Open Ethics Initiative, accessed July 6, 2025, https://openethics.ai/why-is-transparency-the-key-to-ai-compliance-under-the-eu-ai-act/

- Article 26: Obligations of Deployers of High-Risk AI Systems | EU Artificial Intelligence Act, accessed July 6, 2025, https://artificialintelligenceact.eu/article/26/

- Introduce obligations on users of high-risk AI systems – European Digital Rights (EDRi), accessed July 6, 2025, https://edri.org/wp-content/uploads/2022/05/Obligations-on-users-AIA-Amendments-17022022.pdf

- auditboard.com, accessed July 6, 2025, https://auditboard.com/blog/eu-ai-act

- Second-order impacts of civil artificial intelligence regulation on defense: Why the national security community must engage – Atlantic Council, accessed July 6, 2025, https://www.atlanticcouncil.org/in-depth-research-reports/report/second-order-impacts-of-civil-artificial-intelligence-regulation-on-defense-why-the-national-security-community-must-engage/

- THE U.S. APPROACH TO AI REGULATION: FEDERAL LAWS, POLICIES, AND STRATEGIES EXPLAINED, accessed July 6, 2025, https://scholarlycommons.law.case.edu/cgi/viewcontent.cgi?article=1172&context=jolti

- The Federal AI Moratorium is DOA; What’s Next for State AI Regulation?, accessed July 6, 2025, https://www.debevoise.com/insights/publications/2025/07/the-federal-ai-moratorium-is-doa-whats-next

- A Red State Model for Comprehensive AI Laws: Texas Enacts the Responsible Artificial Intelligence Governance Act. – Moore & Van Allen, accessed July 6, 2025, https://www.mvalaw.com/data-points/a-red-state-model-for-comprehensive-ai-laws-texas-enacts-the-responsible-artificial-intelligence-governance-act

- Executives expect complying with AI regulations will increase tech costs – CIO Dive, accessed July 6, 2025, https://www.ciodive.com/news/enterprise-cost-increase-ai-regulation-security-data/724345/

- Safe, Secure, and Trustworthy Development and Use of Artificial Intelligence, accessed July 6, 2025, https://www.federalregister.gov/documents/2023/11/01/2023-24283/safe-secure-and-trustworthy-development-and-use-of-artificial-intelligence

- Executive Order on Safe, Secure, and Trustworthy Artificial Intelligence | NIST, accessed July 6, 2025, https://www.nist.gov/artificial-intelligence/executive-order-safe-secure-and-trustworthy-artificial-intelligence

- The Impact of AI Executive Order’s Revocation Remains Uncertain, but New Trump EO Points to Path Forward, accessed July 6, 2025, https://www.hunton.com/privacy-and-information-security-law/the-impact-of-ai-executive-orders-revocation-remains-uncertain-but-new-trump-eo-points-to-path-forward

- U.S. AI Action Plan: Recommendations to the National Science Foundation and the Office of Science and Technology Policy – ORF Middle East, accessed July 6, 2025, https://orfme.org/research/u-s-ai-action-plan-recommendations-to-the-national-science-foundation-and-the-office-of-science-and-technology-policy/

- Executive Order on Advancing United States Leadership in Artificial Intelligence Infrastructure – Biden White House, accessed July 6, 2025, https://bidenwhitehouse.archives.gov/briefing-room/presidential-actions/2025/01/14/executive-order-on-advancing-united-states-leadership-in-artificial-intelligence-infrastructure/

- Executive Order on Advancing United States Leadership in Artificial Intelligence Infrastructure | SemiWiki, accessed July 6, 2025, https://semiwiki.com/forum/threads/executive-order-on-advancing-united-states-leadership-in-artificial-intelligence-infrastructure.21861/

- White House Issues Guidance on Use and Procurement of Artificial Intelligence Technology, accessed July 6, 2025, https://www.ropesgray.com/en/insights/alerts/2025/04/white-house-issues-guidance-on-use-and-procurement-of-artificial-intelligence-technology

- Artificial Intelligence Risk Management Framework – Regulations.gov, accessed July 6, 2025, https://www.regulations.gov/document/NIST-2021-0004-0001

- NIST AI Risk Management Framework (AI RMF) – Palo Alto Networks, accessed July 6, 2025, https://www.paloaltonetworks.com/cyberpedia/nist-ai-risk-management-framework

- What is NIST AI Risk Management Framework (AI RMF)? – StandardFusion, accessed July 6, 2025, https://www.standardfusion.com/blog/what-is-the-nist-ai-risk-management-framework-(ai-rmf)

- Navigating TRAIGA: Texas’s New AI Compliance Framework | Insights | Ropes & Gray LLP, accessed July 6, 2025, https://www.ropesgray.com/en/insights/alerts/2025/06/navigating-traiga-texas-new-ai-compliance-framework

- While Congress Mulls AI Law Pause, What Are The States Doing? A Current Overview of AI Regulation Across the Country | Fisher Phillips, accessed July 6, 2025, https://www.fisherphillips.com/en/news-insights/a-current-overview-of-ai-regulation-across-the-country.html

- The states are stepping up on health AI regulation | American Medical Association, accessed July 6, 2025, https://www.ama-assn.org/practice-management/digital-health/states-are-stepping-health-ai-regulation

- What Happened to the Big Beautiful Bill’s AI Regulation Enforcement Pause? – Orrick, accessed July 6, 2025, https://www.orrick.com/en/Insights/2025/07/What-Happened-to-the-Big-Beautiful-Bills-AI-Regulation-Enforcement-Pause

- Artificial Intelligence in Hiring: Diverging Federal, State Perspectives on AI in Employment?, accessed July 6, 2025, https://www.hklaw.com/en/insights/publications/2025/03/artificial-intelligence-in-hiring-diverging-federal-state-perspectives

- AI and Workplace Discrimination: What Employers Need to Know after the EEOC and DOL Rollbacks | Husch Blackwell, accessed July 6, 2025, https://www.huschblackwell.com/newsandinsights/ai-and-workplace-discrimination-what-employers-need-to-know-after-the-eeoc-and-dol-rollbacks

- FTC Evaluating Deceptive Artificial Intelligence Claims | Insights – Holland & Knight, accessed July 6, 2025, https://www.hklaw.com/en/insights/publications/2025/06/ftc-evaluating-deceptive-artificial-intelligence-claims

- AI and the Risk of Consumer Harm | Federal Trade Commission, accessed July 6, 2025, https://www.ftc.gov/policy/advocacy-research/tech-at-ftc/2025/01/ai-risk-consumer-harm

- DOJ Signals Tough Stance on Crimes Involving Misuse of Artificial Intelligence – Wiley Rein, accessed July 6, 2025, https://www.wiley.law/alert-DOJ-Signals-Tough-Stance-on-Crimes-Involving-Misuse-of-Artificial-Intelligence

- Artificial Intelligence and Civil Rights – Department of Justice, accessed July 6, 2025, https://www.justice.gov/archives/crt/ai

- Justice Department Establishes Civil Rights Fraud Initiative, Using False Claims Act to Target DEI | Inside Government Contracts, accessed July 6, 2025, https://www.insidegovernmentcontracts.com/2025/05/justice-department-establishes-civil-rights-fraud-initiative-using-false-claims-act-to-target-dei/

- OCR Issues Guidance on AI in Health Care – New York State Dental Association, accessed July 6, 2025, https://www.nysdental.org/news-publications/news/2025/01/11/ocr-issues-guidance-on-ai-in-health-care

- HHS Recent Guidance on AI Use in Health Care | Health Industry Washington Watch, accessed July 6, 2025, https://www.healthindustrywashingtonwatch.com/2025/01/articles/regulatory-developments/hhs-developments/office-for-civil-rights-hhs-developments/hhs-recent-guidance-on-ai-use-in-health-care/

- HIPAA Compliance AI in 2025: Critical Security Requirements You …, accessed July 6, 2025, https://www.sprypt.com/blog/hipaa-compliance-ai-in-2025-critical-security-requirements

- AI in healthcare and what it means for HIPAA – Accountable HQ, accessed July 6, 2025, https://www.accountablehq.com/post/ai-and-hipaa

- CFPB Applies Adverse Action Notification Requirement to Artificial Intelligence Models, accessed July 6, 2025, https://www.skadden.com/insights/publications/2024/01/cfpb-applies-adverse-action-notification-requirement

- Adverse Action Notice Compliance Considerations for Creditors That Use AI, accessed July 6, 2025, https://www.americanbar.org/groups/business_law/resources/business-law-today/2023-november/adverse-action-notice-compliance-considerations-for-creditors-that-use-ai/

- Gramm-Leach-Bliley Act | Federal Trade Commission, accessed July 6, 2025, https://www.ftc.gov/business-guidance/privacy-security/gramm-leach-bliley-act

- GLBA Compliance Requirements – A Complete Checklist – Securiti.ai, accessed July 6, 2025, https://securiti.ai/glba-compliance-requirements/

- GLBA Compliance: Essential Steps for Financial Institutions | Transcend | Data Privacy Infrastructure, accessed July 6, 2025, https://transcend.io/blog/gramm-leach-bliley-act

- The GLBA Safeguards Rule: What You Need to Know – Virtru, accessed July 6, 2025, https://www.virtru.com/blog/compliance/glba/safeguards-rule

- Gramm-Leach-Bliley Act in Action: 7 Identity Management Cases, accessed July 6, 2025, https://www.avatier.com/blog/identity-management-case-studies/

- Federal AI Mandates and Corporate Compliance: What’s Changing in 2025, accessed July 6, 2025, https://www.cogentinfo.com/resources/federal-ai-mandates-and-corporate-compliance-whats-changing-in-2025

- C-suite concern over generative AI regulation intensifies: IBM – CIO Dive, accessed July 6, 2025, https://www.ciodive.com/news/executives-concerned-ai-regulation-adoption-barrier-IBM/724905/

- OECD AI Principles overview, accessed July 6, 2025, https://oecd.ai/en/ai-principles