Why does providing proper customer service require metrics?

AI projects can be complex, and their outcomes can be challenging to quantify. With clear success metrics in place, it becomes possible to assess these initiatives’ true value and impact. Poorly defined or misaligned metrics can lead to false conclusions, wasted resources, and missed opportunities.

Establishing well-defined success metrics from the outset is crucial for several reasons:

- Aligning AI efforts with strategic business goals

- Setting realistic expectations and benchmarks

- Enabling data-driven decision-making and course corrections

- Measuring return on investment (ROI) and justifying resource allocation

- Fostering accountability and continuous improvement

Implementing AI in customer service can revolutionize how businesses interact with their customers, but establishing well-defined success metrics from the outset is crucial for achieving the desired outcomes. Organizations can ensure that their AI customer support software enhances the overall customer service experience by aligning AI efforts with strategic business goals. This alignment involves setting realistic expectations and benchmarks for customer satisfaction scores, response times, and resolution rates, enabling data-driven decision-making and course corrections throughout the implementation process.

Moreover, well-defined success metrics allow companies to measure their AI initiatives’ return on investment (ROI) and justify resource allocation to stakeholders. This measurement is critical when deploying AI in customer service, as it often requires significant technological, training, and infrastructure investments. By demonstrating the tangible benefits of AI, such as increased efficiency, reduced costs, and improved customer loyalty, organizations can build a strong case for continued investment in this area.

Understanding the direct consequences of customer interactions on business outcomes is crucial. Research indicates that customer experience has a significant impact on purchasing decisions. For instance, a study found that 1 in 6 shoppers abandon a purchase because of a bad customer experience. This statistic highlights the importance of investing in AI technologies that can improve customer service interactions, customer satisfaction, and loyalty.

Furthermore, establishing clear success metrics fosters accountability and continuous improvement among customer service professionals and support teams. By setting specific targets for key performance indicators (KPIs) such as first contact resolution rates and customer feedback scores, businesses can motivate their support agents to deliver exceptional service consistently. This data-driven approach also enables organizations to identify areas for improvement and implement targeted training programs to enhance the skills of their customer service agents.

In addition to these benefits, well-defined success metrics can help businesses optimize their AI customer support software over time. Organizations can gain valuable insights into customer preferences, common issues, and emerging trends by analyzing customer interactions and leveraging machine learning algorithms. This knowledge can refine AI models, improve the accuracy of automated responses, and deliver more personalized customer service experiences.

Establishing well-defined success metrics is essential for maximizing AI’s potential in customer service. By aligning AI initiatives with business objectives, setting realistic benchmarks, and fostering a culture of continuous improvement, organizations can unlock the full potential of this transformative technology and deliver unparalleled support to their customers.

Common challenges in AI customer service

Despite its importance, defining success metrics for AI projects can be challenging. Some common hurdles organizations face include:

- Complexity and uncertainty of AI technologies

- Difficulties in quantifying intangible benefits (e.g., customer satisfaction, brand value)

- Lack of historical data or benchmarks for comparison

- Conflicting priorities and stakeholder expectations

- Rapidly evolving AI landscape and shifting goalposts

Artificial intelligence has emerged as a technology that promises to reshape industries and transform how organizations operate. However, as more companies embark on AI projects, they face the critical task of defining success metrics that accurately reflect the impact of their initiatives.

The complexity and uncertainty of artificial intelligence technologies pose a significant challenge. Unlike traditional software systems, artificial intelligence operates in dynamic and often unpredictable environments, making it difficult to forecast its performance accurately. This inherent complexity can lead to a lack of clarity when setting expectations and measuring the success of artificial intelligence projects.

Moreover, many of the benefits of artificial intelligence, such as improved customer satisfaction, increased brand value, and enhanced decision-making capabilities, are intangible and not easily quantified. While these benefits are undoubtedly valuable, they can be challenging to measure and translate into concrete metrics demonstrating artificial intelligence initiatives’ ROI.

The emphasis on customer experience is supported by recent research indicating that most customers consider their experience with a company to be as critical as the products or services offered. This finding highlights the importance of integrating AI to enhance customer interactions and satisfaction.

Another obstacle organizations face when defining success metrics for artificial intelligence projects is the lack of historical data or established benchmarks for comparison. As artificial intelligence continues to evolve at an unprecedented pace, it can be difficult to find relevant data points or industry standards against which to evaluate the performance of current artificial intelligence systems. This lack of historical context can make it challenging to set realistic targets and accurately gauge the success of artificial intelligence projects.

Furthermore, conflicting priorities and stakeholder expectations can complicate defining success metrics. Different departments within an organization may have varying goals and objectives, leading to a lack of alignment on what constitutes success for the artificial intelligence project. For example, the sales team may prioritize increased revenue, while the customer service department may focus on improving customer satisfaction scores. Reconciling these different perspectives and reaching a consensus on success metrics can be complex and time-consuming.

Finally, the rapidly evolving nature of the artificial intelligence landscape means that success metrics must be continuously updated and adapted to remain relevant and meaningful over time. As new artificial intelligence technologies emerge and business requirements change, organizations must be prepared to reassess their metrics and adjust their strategies accordingly. This requires a proactive and agile approach to metric definition and a willingness to embrace change and continuously iterate on success criteria.

Aligning AI Metrics with Business Objectives

To ensure that AI projects deliver meaningful value, their success metrics must closely align with the organization’s overall business objectives. This alignment requires a thorough understanding of the company’s strategic priorities, key performance indicators, and desired outcomes.

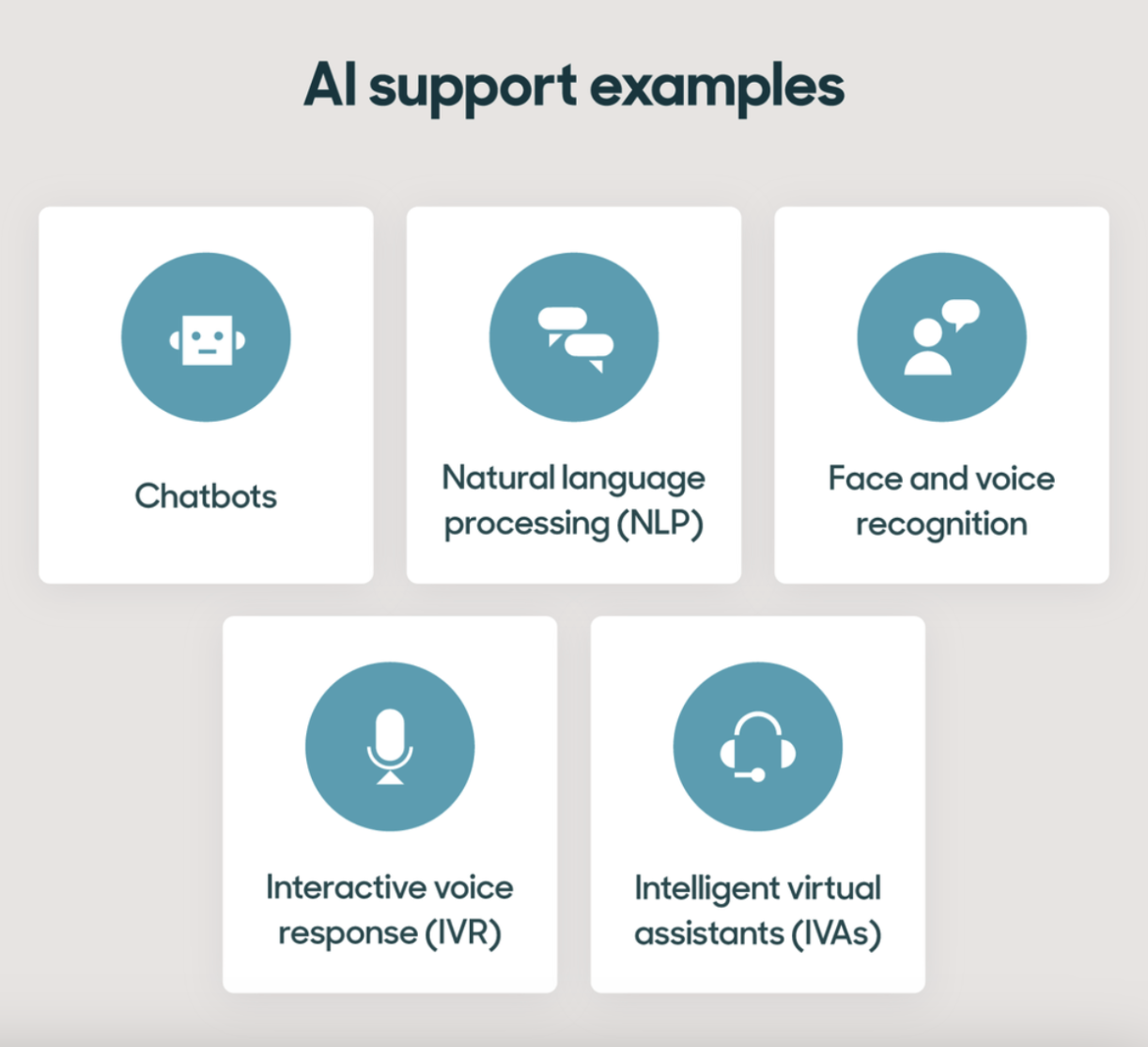

One key area in which AI can have a significant impact is customer support. By leveraging AI-powered self-service options, such as chatbots and virtual assistants, businesses can reduce the workload on human agents and improve the overall efficiency of their customer support teams.

To measure the success of these initiatives, organizations should track metrics such as the deflection rate, which represents the percentage of customer inquiries resolved without human intervention. Additionally, monitoring the average handling time for remaining human-assisted interactions can provide insights into how effectively AI is streamlining the support process.
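The two metrics described above can be computed from a simple interaction log. The following is a minimal sketch, not a reference implementation: the `Interaction` record, its field names, and the sample data are assumptions made for illustration, and a real ticketing system will expose this data differently.

```python
from dataclasses import dataclass


@dataclass
class Interaction:
    # Hypothetical record shape; field names are illustrative only.
    resolved_by_ai: bool    # True if closed without a human agent
    handle_seconds: float   # time spent handling the interaction


def deflection_rate(interactions):
    """Percentage of inquiries resolved without human intervention."""
    if not interactions:
        return 0.0
    deflected = sum(1 for i in interactions if i.resolved_by_ai)
    return 100.0 * deflected / len(interactions)


def average_handle_time(interactions):
    """Mean handle time in seconds for human-assisted interactions only."""
    human = [i.handle_seconds for i in interactions if not i.resolved_by_ai]
    return sum(human) / len(human) if human else 0.0


# Made-up sample data for demonstration.
sample = [
    Interaction(resolved_by_ai=True, handle_seconds=40.0),
    Interaction(resolved_by_ai=True, handle_seconds=55.0),
    Interaction(resolved_by_ai=False, handle_seconds=300.0),
    Interaction(resolved_by_ai=False, handle_seconds=420.0),
]

print(deflection_rate(sample))      # 50.0
print(average_handle_time(sample))  # 360.0
```

Restricting the handle-time average to human-assisted interactions keeps the two metrics independent: deflection shows how much work the AI absorbs, while handle time shows whether the remaining work is getting faster.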

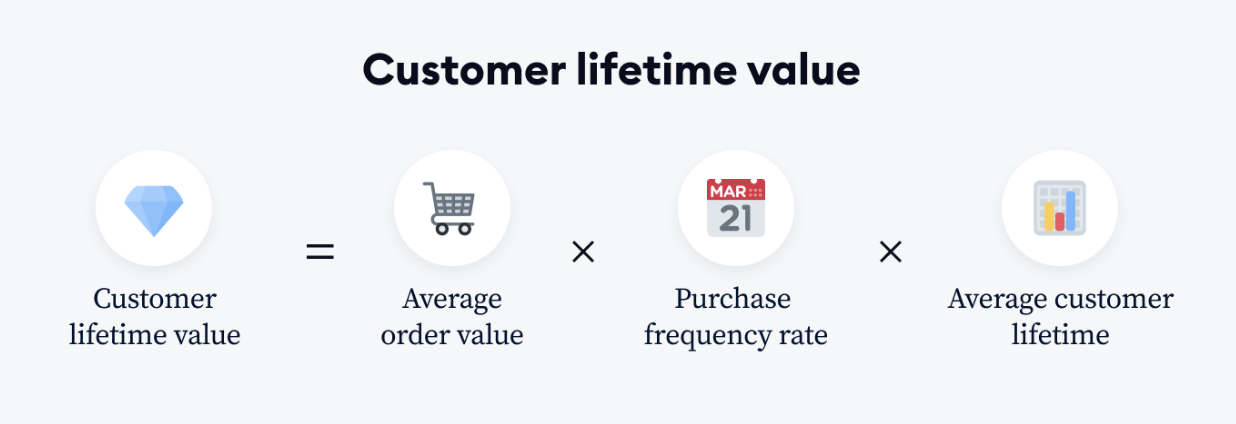

Another crucial aspect of aligning AI success metrics with business objectives is understanding the impact of these technologies on customer behavior and purchase history. For example, if the goal is to drive revenue growth through personalized product recommendations, relevant metrics could include the conversion rate for AI-recommended products, the average order value for AI-influenced transactions, and the customer lifetime value of AI-targeted segments. By analyzing these metrics, organizations can gain valuable insights into the effectiveness of their artificial intelligence initiatives in driving business results.
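As a rough illustration of the revenue metrics mentioned above, the sketch below computes a conversion rate for AI-recommended products and compares average order value between AI-influenced and other orders. The function names and all figures are hypothetical, and customer lifetime value is left out because its definition varies widely between businesses.

```python
def conversion_rate(recommendations_shown, purchases):
    """Percentage of shown recommendations that led to a purchase."""
    if recommendations_shown == 0:
        return 0.0
    return 100.0 * purchases / recommendations_shown


def average_order_value(order_totals):
    """Mean order total; returns 0.0 for an empty list."""
    return sum(order_totals) / len(order_totals) if order_totals else 0.0


def percent_uplift(control, treated):
    """Relative difference of `treated` over `control`, in percent."""
    return 100.0 * (treated - control) / control


# Made-up figures for demonstration only.
ai_orders = [40.0, 60.0, 80.0]   # orders containing an AI-recommended item
other_orders = [30.0, 50.0]      # orders without one

print(conversion_rate(2000, 90))   # 4.5
print(percent_uplift(average_order_value(other_orders),
                     average_order_value(ai_orders)))  # 50.0
```

A raw comparison like this does not prove the recommendations caused the difference; a controlled experiment (for example, an A/B test) is usually needed before attributing the uplift to AI.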

To achieve this alignment, organizations must thoroughly understand their strategic priorities, key performance indicators, and desired outcomes. This understanding requires close collaboration between business leaders, AI experts, and customer support teams to ensure everyone is working towards the same goals. By involving stakeholders from across the organization in defining success metrics, businesses can create a shared vision for the role of artificial intelligence in driving customer engagement and business growth.

Moreover, as AI technologies continue to evolve, organizations must remain agile and adaptable in measuring success. Organizations should regularly reassess and refine success metrics to remain relevant and aligned with changing business needs. For example, as natural language understanding capabilities improve, organizations may need to adjust their metrics to focus more on the quality and relevance of AI-generated responses rather than simply measuring the volume of customer service interactions handled by AI.

Ultimately, the key to aligning AI success metrics with business objectives is a holistic view of the customer experience. Businesses can develop AI solutions that deliver meaningful value to both parties by considering the customer’s perspective and the organization’s goals. Whether through improved self-service options, more efficient agent interactions, or personalized product recommendations, artificial intelligence can transform how organizations engage with their customers and drive long-term business success.

Identifying Key Performance Indicators

When defining success metrics for AI projects, it is crucial to ensure that they are:

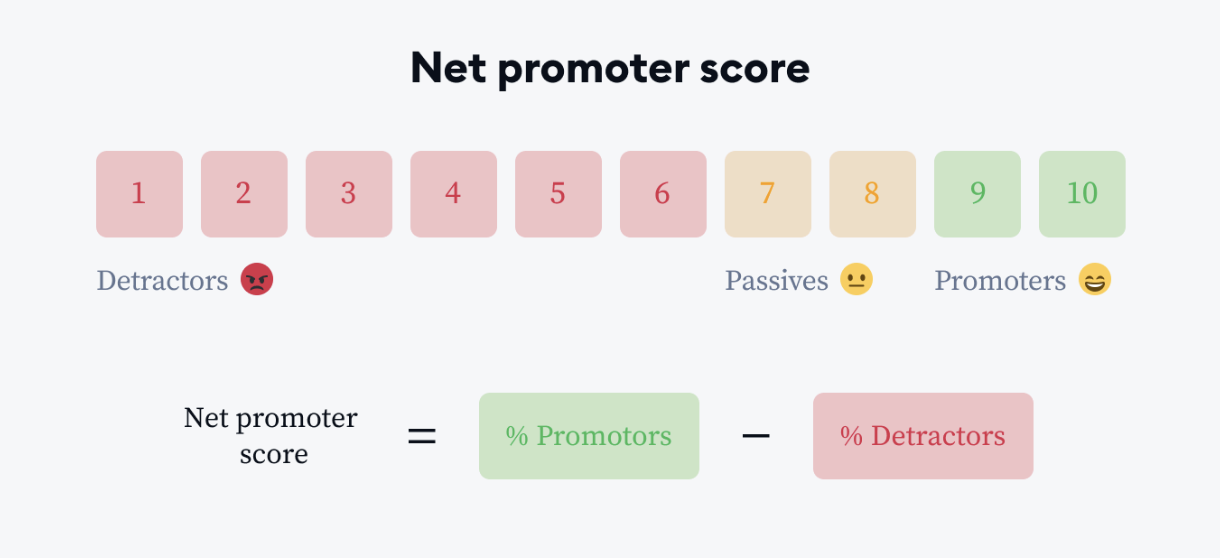

- Relevant: The chosen metrics should be directly tied to the AI initiative’s desired outcomes and business objectives. They should reflect the specific goals the organization aims to achieve through the implementation of AI. For example, if the primary purpose is to improve customer satisfaction, relevant metrics might include customer satisfaction scores (CSAT), net promoter scores (NPS), or customer effort scores (CES). If the goal is to increase operational efficiency, relevant metrics could be the reduction in average handle time (AHT) for customer support inquiries or the percentage of tasks automated through AI.

- Measurable: Success metrics must be quantifiable and trackable over time, which allows organizations to monitor progress, identify trends, and make data-driven decisions based on the performance of the AI system. Measurable metrics should have clear definitions and standardized calculation methods to ensure consistency and comparability. For instance, if the aim is to reduce customer churn, a measurable metric would be the churn rate, calculated as the percentage of customers who discontinue their relationship with the company within a specific time frame.

- Achievable: The selected metrics should be realistic and within the project team’s control. Setting overly ambitious or unrealistic targets can demotivate the team and lead to disappointment if unmet. When defining achievable metrics, consider factors such as the current performance baseline, available resources, and the expected impact of the AI solution. For example, if the current first call resolution (FCR) rate for customer support is 60%, setting a target of 65% within the first quarter of AI implementation may be achievable, whereas aiming for 90% might be unrealistic.

- Time-bound: Success metrics should be evaluated at pre-determined intervals, such as monthly or quarterly, to ensure regular monitoring and timely course corrections. Setting specific time frames for measuring progress helps maintain accountability and keeps the project on track. Time-bound metrics also provide opportunities to celebrate milestones and make necessary adjustments based on performance. For example, suppose the goal is to increase sales through AI-powered product recommendations. In that case, the organization might set a target of a 10% increase in average order value (AOV) within the first six months of implementation, with monthly check-ins to monitor progress.
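To make the "clear definitions and standardized calculation methods" point concrete, here is a minimal sketch of three of the metrics named above. It assumes commonly used conventions: CSAT as the share of 4 and 5 ratings on a 5-point scale, NPS as the percentage of promoters (9 to 10) minus the percentage of detractors (0 to 6) on a 0 to 10 scale, and churn rate as customers lost divided by customers at the start of the period. Your organization may define these differently, and the survey data here is invented.

```python
def csat(scores):
    """CSAT: percentage of ratings that are 4 or 5 on a 1-5 scale."""
    if not scores:
        raise ValueError("no survey responses")
    satisfied = sum(1 for s in scores if s >= 4)
    return 100.0 * satisfied / len(scores)


def nps(scores):
    """NPS: % promoters (9-10) minus % detractors (0-6) on a 0-10 scale."""
    if not scores:
        raise ValueError("no survey responses")
    promoters = sum(1 for s in scores if s >= 9)
    detractors = sum(1 for s in scores if s <= 6)
    return 100.0 * (promoters - detractors) / len(scores)


def churn_rate(customers_at_start, customers_lost):
    """Percentage of starting customers who left during the period."""
    if customers_at_start <= 0:
        raise ValueError("customers_at_start must be positive")
    return 100.0 * customers_lost / customers_at_start


print(csat([5, 4, 3, 5, 2]))                   # 60.0
print(nps([10, 9, 8, 6, 3, 10, 7, 9, 2, 10]))  # 20.0
print(churn_rate(1000, 50))                    # 5.0
```

Fixing the formula in code (or in a shared metrics definition) is what makes results comparable from one reporting period to the next.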

To further illustrate these principles, consider a scenario where an e-commerce company is implementing an AI-powered chatbot to improve customer support. Some relevant, measurable, achievable, and time-bound success metrics for this project could be:

- Relevant: Increase customer satisfaction score (CSAT) for chatbot interactions by 15% within the first quarter.

- Measurable: Reduce average handle time (AHT) for customer inquiries by 30% compared to the previous quarter.

- Achievable: Automate 50% of tier-1 support inquiries through the chatbot within the first six months.

- Time-bound: Achieve a chatbot adoption rate of 30% among customers within the first three months of launch, with monthly progress reviews.

By defining success metrics that are relevant, measurable, achievable, and time-bound, organizations can effectively track the performance of their AI initiatives, make informed decisions, and ensure that their investments in AI deliver tangible business value.
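One way to keep such targets explicit is to record each one with its baseline, target value, and deadline, then check progress at every review. The sketch below is illustrative only: the `MetricTarget` class is hypothetical, the deadline date is invented, and the baseline and target reuse the first call resolution example (60% to 65%) from earlier in this section.

```python
from dataclasses import dataclass
from datetime import date


@dataclass
class MetricTarget:
    # Hypothetical structure for recording a time-bound target.
    name: str
    baseline: float   # value before the AI rollout
    target: float     # value to reach by the deadline
    deadline: date

    def progress(self, current):
        """Fraction of the baseline-to-target gap closed (may exceed 1.0).

        Works for metrics that should rise (FCR) or fall (handle time),
        because the sign of the gap follows the direction of the target.
        """
        gap = self.target - self.baseline
        if gap == 0:
            return 1.0
        return (current - self.baseline) / gap

    def is_met(self, current, on):
        """True if the target was reached on or before the deadline."""
        return on <= self.deadline and self.progress(current) >= 1.0


# Illustrative target; the date is made up.
fcr = MetricTarget("first call resolution (%)", baseline=60.0,
                   target=65.0, deadline=date(2025, 3, 31))

print(fcr.progress(63.0))                   # 0.6
print(fcr.is_met(65.5, date(2025, 3, 15)))  # True
```

Expressing progress as a fraction of the gap, rather than as a raw value, lets a monthly review compare metrics that use different units on one scale.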

Examples of common AI project KPIs:

- Accuracy/precision of predictions or classifications

- Processing speed or throughput

- Model performance (e.g., F1 score, AUC-ROC)

- Cost savings or revenue generation

- User adoption and engagement metrics
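For the model-level KPIs in this list, the sketch below computes accuracy, precision, recall, and F1 for a binary classifier from scratch, using the standard definitions. The labels are invented (imagine 1 meaning "billing issue" in a ticket classifier), and AUC-ROC is omitted because it needs predicted scores rather than hard labels.

```python
def classification_kpis(y_true, y_pred):
    """Accuracy, precision, recall and F1 for binary labels (0 or 1)."""
    if len(y_true) != len(y_pred) or not y_true:
        raise ValueError("inputs must be non-empty and of equal length")

    pairs = list(zip(y_true, y_pred))
    tp = sum(1 for t, p in pairs if t == 1 and p == 1)  # true positives
    fp = sum(1 for t, p in pairs if t == 0 and p == 1)  # false positives
    fn = sum(1 for t, p in pairs if t == 1 and p == 0)  # false negatives
    tn = sum(1 for t, p in pairs if t == 0 and p == 0)  # true negatives

    accuracy = (tp + tn) / len(pairs)
    precision = tp / (tp + fp) if (tp + fp) else 0.0
    recall = tp / (tp + fn) if (tp + fn) else 0.0
    f1 = (2 * precision * recall / (precision + recall)
          if (precision + recall) else 0.0)
    return {"accuracy": accuracy, "precision": precision,
            "recall": recall, "f1": f1}


# Made-up labels for demonstration.
y_true = [1, 1, 1, 0, 0, 0, 1, 0]
y_pred = [1, 0, 1, 0, 1, 0, 1, 0]

print(classification_kpis(y_true, y_pred))
# {'accuracy': 0.75, 'precision': 0.75, 'recall': 0.75, 'f1': 0.75}
```

In practice a tested library implementation is preferable; the point here is only to show what each number measures.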

Incorporating Quantitative and Qualitative Measures

When defining success metrics for AI projects, balancing quantitative and qualitative measures is essential. While quantitative metrics provide objective, numerical data points for tracking progress and measuring impact, qualitative metrics offer valuable context and insights into the AI solution’s performance and its effect on users.

Quantitative metrics, such as accuracy rates, cost savings, and conversion rates, are crucial for assessing the tangible benefits of an AI system. For example, in a customer support context, quantitative metrics might include the percentage of customer questions answered correctly by the AI, the reduction in average handle time, or the increase in first contact resolution rates. These metrics allow organizations to gauge the efficiency and effectiveness of their AI solution and make data-driven decisions based on its performance.

However, relying solely on quantitative metrics can leave blind spots in understanding the full impact of an AI project. Qualitative metrics, such as user satisfaction surveys, expert evaluations, and observational data, provide a more comprehensive picture of how the AI system is perceived and experienced by its users. These metrics can capture nuanced aspects of the user experience, such as the ease of use, the relevance and appropriateness of AI-generated responses, and the overall sentiment towards the AI solution.

For instance, in a customer support scenario, qualitative metrics might include feedback from customers on their satisfaction with the AI-powered support experience, observations from support agents on how the AI assists them in providing more personalized service, or insights from usability studies on how intuitive and user-friendly the AI interface is. These qualitative measures can help identify areas for improvement, uncover unintended consequences, and ensure that the AI system is meeting the needs and expectations of its users.

Moreover, qualitative metrics can be particularly valuable when dealing with unstructured data, such as customer reviews or social media posts. By applying techniques like sentiment analysis, organizations can gain a deeper understanding of customer opinions, preferences, and pain points, which can inform the development and refinement of their AI solutions.
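To give a feel for what sentiment analysis does, here is a deliberately tiny lexicon-based scorer. It is a toy under stated assumptions: the word lists are invented, negation ("not helpful") and sarcasm are not handled, and production systems typically rely on trained models rather than fixed word lists.

```python
import re

# Tiny illustrative word lists; a real lexicon would be far larger.
POSITIVE = {"great", "helpful", "fast", "easy", "love", "thanks"}
NEGATIVE = {"slow", "useless", "confusing", "frustrating", "wrong", "bad"}


def sentiment_score(text):
    """Score in [-1.0, 1.0]: (positive - negative) / matched words.

    Returns 0.0 when no word from either list appears.
    """
    words = re.findall(r"[a-z']+", text.lower())
    pos = sum(1 for w in words if w in POSITIVE)
    neg = sum(1 for w in words if w in NEGATIVE)
    if pos + neg == 0:
        return 0.0
    return (pos - neg) / (pos + neg)


print(sentiment_score("The chatbot was fast and helpful, thanks!"))          # 1.0
print(sentiment_score("Slow and confusing, the bot gave the wrong answer."))  # -1.0
print(sentiment_score("I asked about my order."))                             # 0.0
```

Averaging such scores per channel or per week turns free-text feedback into a trend that can sit alongside the quantitative metrics.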

Organizations should incorporate quantitative and qualitative metrics to create a well-rounded approach to AI project success measurement. This holistic view allows for a more comprehensive assessment of the AI system’s performance, impact on business objectives, and user reception. By considering both types of metrics, organizations can make informed decisions, iterate on their AI solutions, and ensure that they deliver value to their customers and stakeholders.

Establishing Baselines and Tracking Progress

To measure the true impact of customer service AI initiatives, it’s essential to establish clear baselines for comparison. These baselines should reflect the pre-AI state or the current performance of existing systems or processes in handling customer queries and delivering customer support. By setting these benchmarks, organizations can accurately gauge the effectiveness of their AI implementations in improving customer service.

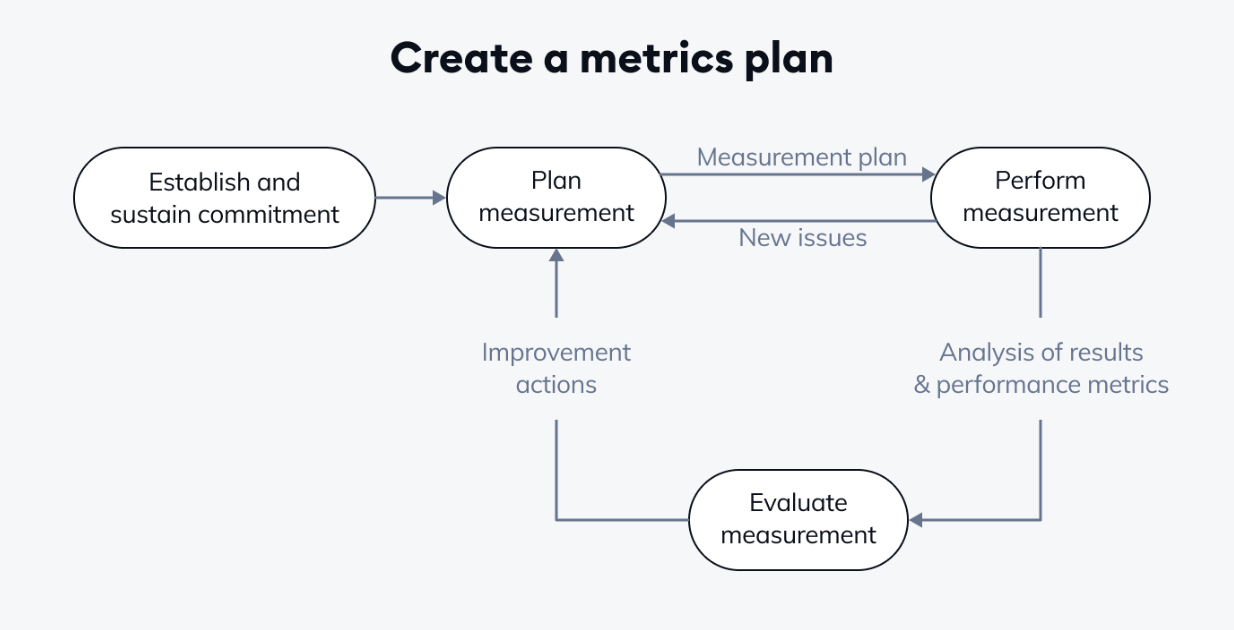

Once baselines are established, success metrics should be tracked and evaluated at pre-determined intervals, such as monthly, quarterly, or annually. Regular tracking supports ongoing progress monitoring, surfaces trends and patterns, and enables data-driven decision-making, so organizations can assess how well their AI initiatives are performing and identify areas for improvement.
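Tracking against a baseline amounts to expressing each period's value as a change relative to the pre-AI figure. The sketch below assumes a hypothetical pre-AI average handle time of 400 seconds and invented monthly readings; the function names are illustrative.

```python
def percent_change(baseline, current):
    """Relative change from the baseline, in percent (negative = decrease)."""
    if baseline == 0:
        raise ValueError("baseline must be non-zero")
    return 100.0 * (current - baseline) / baseline


def track_against_baseline(baseline, readings):
    """Return (period, value, percent change vs. baseline) per reading."""
    return [(period, value, percent_change(baseline, value))
            for period, value in readings]


# Hypothetical numbers: pre-AI average handle time and monthly readings.
baseline_aht = 400.0
monthly_aht = [("2025-01", 380.0), ("2025-02", 340.0), ("2025-03", 300.0)]

for period, value, change in track_against_baseline(baseline_aht, monthly_aht):
    print(period, value, change)
# 2025-01 380.0 -5.0
# 2025-02 340.0 -15.0
# 2025-03 300.0 -25.0
```

Comparing every period with the same fixed baseline, rather than with the previous period, keeps the cumulative effect of the AI rollout visible.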

To effectively track the success of AI projects aimed at enhancing customer service, organizations can leverage various techniques and tools:

- Dashboard and reporting tools: These tools provide a centralized, visually intuitive way to monitor KPIs related to customer service AI. They can aggregate data from multiple sources, such as customer interactions, ticketing systems, and CRM platforms, to provide a comprehensive view of the AI system’s performance. Some popular dashboard and reporting tools include Tableau, Power BI, and Looker Studio (formerly Google Data Studio).

- Automated data collection and integration mechanisms: These tools can streamline the process of gathering and analyzing customer data, making it easier to track the impact of AI initiatives. Organizations can consistently capture and analyze relevant data by integrating AI systems with existing customer service platforms and databases. Tools like Apache Kafka, Talend, and Fivetran can help with data integration and automated data collection.

- Regular progress reviews and retrospectives: These sessions provide opportunities for teams to discuss the successes, challenges, and lessons learned from AI projects. They can help identify best practices, address bottlenecks, and adapt the AI strategy based on real-world insights. Organizations can optimize their AI initiatives to better serve their customers by fostering a culture of continuous improvement and learning. Collaboration and project management tools like Jira, Trello, and Asana can facilitate these reviews and retrospectives.

- Continuous monitoring and alerting systems: These tools can help organizations proactively identify and address issues with their customer service AI implementations. By setting up automated alerts for anomalies, such as sudden drops in performance metrics or spikes in customer complaints, teams can quickly investigate and resolve problems before they escalate. Monitoring tools like Datadog, New Relic, and Splunk can help with real-time monitoring and alerting.

- NLP tools: AI technologies like NLP can analyze unstructured customer data, such as support tickets, chat transcripts, and social media posts. By extracting insights from this data, organizations can better understand customer needs, preferences, and pain points and use this knowledge to train their AI systems to provide more accurate and relevant responses. NLP tools like spaCy, NLTK, and Google Cloud Natural Language API can assist with this analysis.
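The alerting idea in the list above (flagging sudden drops in a metric) can be sketched with a trailing-window z-score check. This is one simple approach among many, not how any of the named monitoring products work internally; the window size, threshold, and daily CSAT values are assumptions chosen for illustration.

```python
from statistics import mean, stdev


def find_anomalies(values, window=7, threshold=3.0):
    """Return indices whose value lies more than `threshold` standard
    deviations from the mean of the preceding `window` values."""
    alerts = []
    for i in range(window, len(values)):
        history = values[i - window:i]
        mu = mean(history)
        sigma = stdev(history)
        if sigma == 0:
            # Flat history: treat any deviation at all as anomalous.
            if values[i] != mu:
                alerts.append(i)
            continue
        if abs(values[i] - mu) / sigma > threshold:
            alerts.append(i)
    return alerts


# Made-up daily CSAT percentages with one sudden drop on day index 8.
daily_csat = [88, 90, 89, 91, 90, 89, 90, 91, 72, 90]

print(find_anomalies(daily_csat))  # [8]
```

A flagged index would typically trigger a notification so the team can investigate before the issue escalates. Note that an outlier inflates the spread of later windows, so detection can be less sensitive for a few days after an alert.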

Adapting AI Success Metrics for Continuous Improvement and Alignment of Customer Service

As AI projects mature and evolve, the associated success metrics may need to be revisited and adapted to ensure they remain relevant and aligned with the project’s goals. This iterative approach is crucial for driving continuous improvement and maximizing the value of AI investments in customer service.

A change in business priorities or market conditions is one key reason for adapting success metrics. For example, if a company initially focused on using AI to improve agent productivity by automating routine tasks but later identified customer retention as a more pressing concern, the success metrics would need to shift accordingly. In this case, new metrics might include customer satisfaction scores, churn rates, or the percentage of customer messages successfully resolved without escalation to a human agent.

Another factor that may necessitate updating success metrics is an expanded scope or pivot in the project’s direction. As organizations gain experience with AI and uncover new opportunities, they may decide to broaden the application of AI to additional customer service channels or processes. For instance, a company that started with a chatbot for handling simple customer inquiries might expand its AI initiatives to include intelligent routing of complex issues to specialized support teams. In this scenario, success metrics would need to evolve to capture the impact of AI across these new areas, such as the accuracy of routing decisions or the reduction in average handle time for escalated cases.

Lessons learned and insights gained during the implementation process can also inform the refinement of success metrics. As organizations deploy AI solutions and gather data on their performance, they may identify new KPIs or discover that certain metrics are more meaningful than others. For example, a company might initially track the number of customer conversations handled by its AI-powered interactive voice response (IVR) system but later realize that measuring customer intent recognition accuracy provides more actionable insights for improving the system’s effectiveness.

The availability of new data sources or tracking capabilities can also drive the adaptation of success metrics. As AI technologies advance and organizations invest in more sophisticated analytics tools, they may gain access to previously untapped customer data sources or be able to track metrics at a more granular level. For instance, sentiment analysis of customer feedback across multiple channels might become possible, enabling organizations to gauge the emotional impact of their AI initiatives and identify areas where a human touch is still needed.

Maintaining an agile and iterative approach to defining and refining success metrics ensures their continued relevance and alignment with project goals. By regularly reviewing and updating these metrics, organizations can effectively leverage AI to drive continuous improvement in customer service. This involves setting up processes for periodically assessing the appropriateness of existing metrics, incorporating new insights and data sources, and adjusting metrics as needed to reflect evolving priorities and capabilities.

Case study

The case study of Allegro’s integration of a Machine Learning (ML)-powered Visual Search feature seamlessly encapsulates the core principles of aligning AI initiatives with well-defined success metrics, a theme central to enhancing AI’s role in customer service. Allegro’s journey over two years highlights the imperative to establish clear metrics that track technological effectiveness and measure improvements in user experience and satisfaction. Their meticulous approach, from the introduction of the Visual Search to iterative user feedback and continuous adaptation, exemplifies how to strategically harness AI to meet customer needs and business objectives effectively.

Initially, Allegro set specific, measurable goals for the Visual Search feature, aiming to reduce the time customers spend finding products and to increase overall transaction values. By continuously tracking these metrics, Allegro could make data-driven decisions that significantly refined its AI tool’s accuracy and user interaction quality. This ongoing process helped optimize the feature and aligned it with broader business goals such as cost reduction and enhanced customer loyalty. The success of Allegro’s project, underscored by increased impulse purchases and a larger average cart size, stands as a robust example of how well-defined metrics are instrumental in realizing the potential of AI to transform customer interactions. This case study reaffirms the necessity of integrating AI solutions with a deep understanding of user behavior and rigorous metric-driven strategies to achieve sustained business growth and customer satisfaction.

Conclusion

In conclusion, establishing well-defined success metrics is essential for maximizing AI’s potential in customer service. By aligning AI initiatives with business objectives, setting realistic benchmarks, and fostering a culture of continuous improvement, organizations can unlock the full potential of this transformative technology. As demonstrated by Allegro, a strategic, metric-driven approach can lead to significant enhancements in customer experience and business outcomes.

Sources:

https://www.tidio.com/blog/customer-success-metrics/

https://www.zendesk.com/blog/ai-customer-service/

https://blog.hubspot.com/service/customer-service-stats