As artificial intelligence (AI) becomes more sophisticated, the potential implications for business become more varied and complex. One of the most important issues to consider is how to ensure that AI development is ethical and responsible. Unfortunately, many businesses are paying little attention to AI’s ethical implications in their haste to capitalize on this transformative technology. This lack of diligence could have disastrous consequences for both individual companies and the economy as a whole. In this article, we will explore the business risks of disregarding ethics in AI.

The Business Risks of Poor AI Ethics and Governance

The AI revolution is well underway, and businesses are rushing to adopt AI technologies to stay competitive. However, as these technologies become more ubiquitous and powerful, the need for ethical safeguards around their use grows, and many businesses are falling short. In 2015, Amazon discovered that the AI in its experimental recruiting software favored male candidates. Although that case drew wide attention, similar mistakes still happen today. In the fall of 2021, after Facebook users watched a video from a British tabloid featuring Black men, the platform's AI-driven recommendation system asked whether they wanted to keep seeing videos about primates.

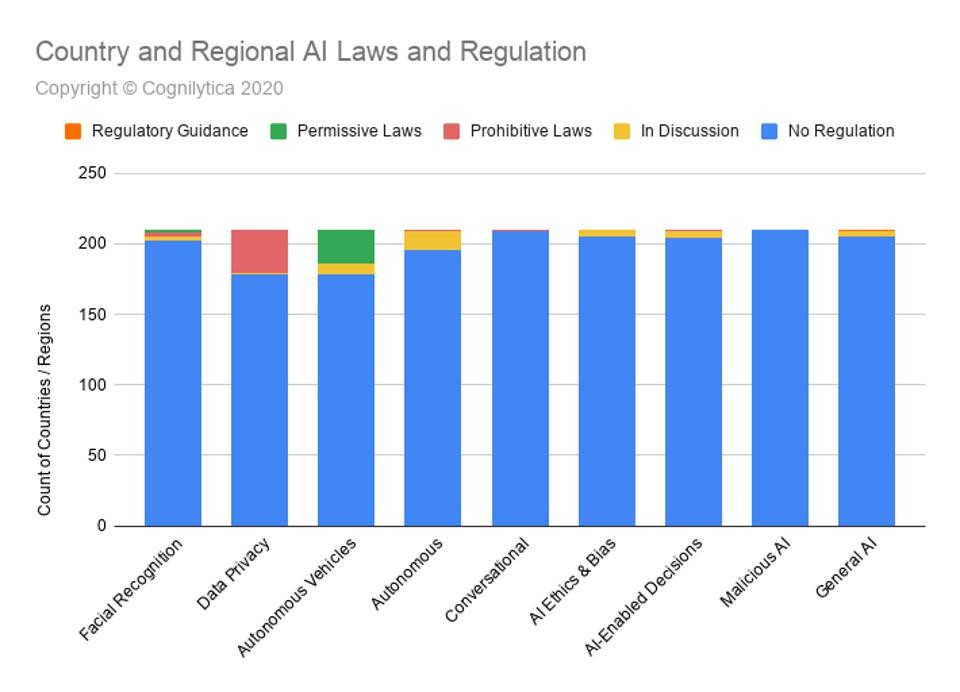

As the chart above shows, laws and regulations specifically addressing AI ethics and bias are still under discussion, despite the shortcomings of numerous tech companies. The chart also shows that regulators have already acted in other areas of AI. As AI becomes more powerful and prevalent in businesses worldwide, laws governing AI ethics are likely to follow, and businesses that ignore ethics in AI expose themselves to several risks.

Regulatory Action

First, there is the risk of regulatory action. As AI technologies become more prevalent, governments will likely introduce regulations to oversee their use. According to the Harvard Business Review, the Federal Trade Commission (FTC) released an uncharacteristically bold set of guidelines in 2021 on “truth, fairness, and equity” in AI, broadly defining unfairness, and therefore the illegal use of AI, as any act that “causes more harm than good.” These guidelines are still at an early stage, but they signal a new era of government regulation of AI worldwide. Shortly after the FTC guidelines appeared, the European Commission proposed its own rules for AI. The Commission’s proposal includes a requirement for businesses to conduct “impact assessments” of AI systems, with a particular focus on equality and non-discrimination.

This regulatory pressure will likely increase in the coming years as AI becomes more embedded in society. Businesses that do not address ethical AI concerns proactively could face fines and penalties, or find themselves at a competitive disadvantage against rivals that prepared early.

Reputational Damage

Second, there is the reputational risk of unethical AI practices. In recent years, a number of high-profile companies have come under fire for how they use AI and personal data. In the Cambridge Analytica scandal, which came to light in 2018, the political consulting firm Cambridge Analytica obtained the personal data of millions of Facebook users without their consent and used it for political advertising. In the aftermath, Facebook lost $35 billion in market value, and it remains widely distrusted by the American public. A business caught using AI unethically could suffer similarly severe reputational damage.

This reputational damage can significantly affect a business’s bottom line. A study by the University of California, Berkeley found that a company’s stock price drops by an average of 0.93% on the day it becomes embroiled in a scandal, and that these companies also see a long-term decline in stock price of about 12%. The same study found that it takes an average of three years for a company’s stock price to recover from a scandal. The reputational risks of unethical AI practices are too high for businesses to ignore.

Loss of Competitive Advantage

Third, there is the risk of losing a competitive advantage. As AI technologies become commonplace, businesses that use them effectively will gain a significant edge over their competitors. However, a business seen as using AI unethically could squander that edge, because customers and clients will increasingly prefer to do business with companies they trust to use AI ethically.

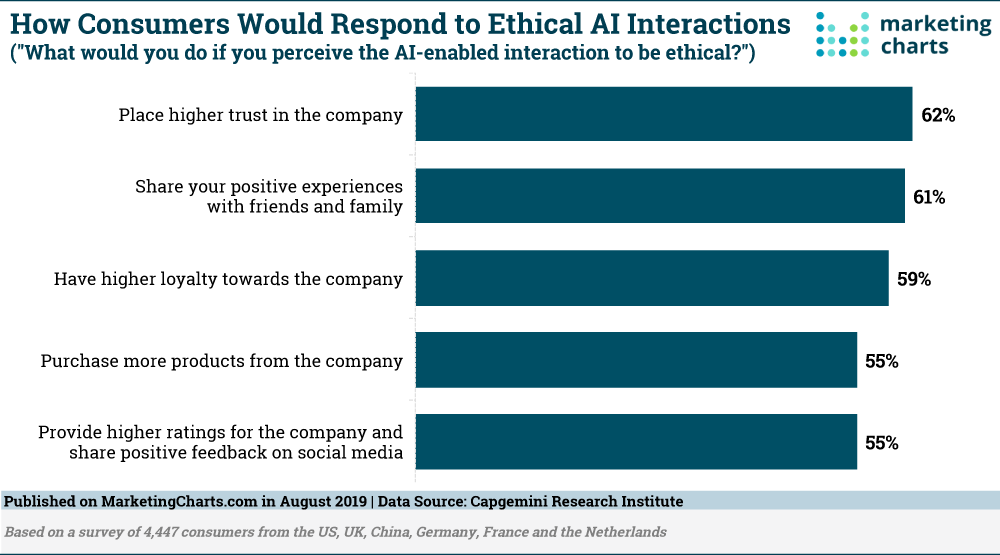

The graph above shows that consumers would respond positively to companies that use AI ethically, which suggests that weighing the ethical implications of the AI it implements could help a business improve customer retention. In a survey of 1,000 consumers in the United States, the UK, and Germany, 86% said they would be willing to pay more for products and services from companies committed to ethical AI practices, and 89% said they would boycott a company revealed to be using AI unethically.

Businesses therefore need to be aware of the ethical implications of their AI practices. Those that are not could face a competitive disadvantage, reputational damage, or even regulatory action.

Civil Liability

Finally, there is the risk of civil liability. If a business’s AI technology harms individuals, the business could be held liable in a civil lawsuit. This risk is particularly relevant given recent advances in facial recognition technology: a business that uses facial recognition unethically could be sued for invasion of privacy or defamation.

Cases of civil liability are already emerging. In 2021, a U.S. federal judge approved a $650 million settlement of a class-action lawsuit brought on behalf of Illinois users, which alleged that Facebook’s photo-tagging facial recognition feature collected their biometric data without consent, in violation of the state’s Biometric Information Privacy Act. In the U.K., Google and its AI subsidiary DeepMind faced a representative action over the health records of roughly 1.6 million patients, which the Royal Free London NHS Foundation Trust shared with DeepMind to develop a health app without the patients’ consent. The U.K. Information Commissioner’s Office had earlier ruled that this data sharing failed to comply with U.K. data protection law. Cases like these are likely to become more common as AI technologies become more widespread, so businesses need to be aware of the potential for civil liability and take steps to mitigate it.

Conclusion

These are just a few of the business risks associated with poor AI ethics and governance. As businesses rush to adopt AI to stay competitive, those that fail to address its ethical implications proactively face serious risks, including regulatory action, reputational damage, loss of competitive advantage, and civil liability. Businesses that want to stay ahead of the curve need to take these risks seriously.
